Contents

- Introduction

- Overview

- Configuring Time Travel

- Querying/cloning historical data/objects

- Undropping historical objects

- Conclusion

- References

Introduction

Snowflake is a cloud-based data warehouse similar to others such as Amazon Redshift and Google BigQuery. However, Snowflake differentiates itself quite a bit by supporting multi-cloud configurations, separation of storage and compute, fine-grained role-based access controls among many other data security features, and temporal data access.

In this post, I provide an overview of Time Travel, Snowflake’s feature that enables temporal data access, and highlight some affordances of the feature.

Overview

As mentioned, Time Travel is Snowflake’s feature that enables temporal data access. Users can access historical data that’s up to 90 days old starting with Snowflake’s Enterprise edition. The lowest edition in their tiered offering is the Standard edition, which allows access to 1-day old historical data.

Everyone gets to Time Travel! You get to Time Travel!

We all get to take advantage of this feature to do things like query historical data, create point-in-time snapshots of our data, and recover from accidental data loss.

Time Travel is enabled by default for all Snowflake accounts, but the DATA_RETENTION_TIME_IN_DAYS parameter that controls how far back we can access data is initialized at 1. Let’s take a moment to discuss how we can change the default Time Travel configuration.

Configuring Time Travel

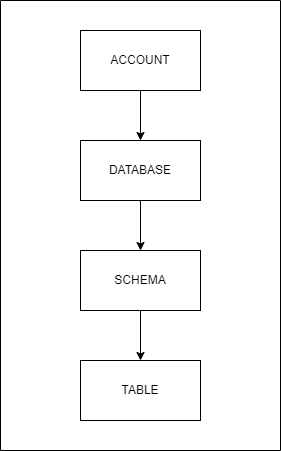

Consider the image below. It shows a linear top-down hierarchy of the Snowflake account, database, schema, and table objects.

The DATA_RETENTION_TIME_IN_DAYS parameter can be configured for each of these objects using an ALTER statement.

ALTER [ACCOUNT|DATABASE|SCHEMA|TABLE]

SET DATA_RETENTION_TIME_IN_DAYS = <value>;

By default, the DATA_RETENTION_TIME_IN_DAYS parameter is set to 1 at the account level, and its value is inherited by the objects lower in the hierarchy that do not have the parameter set for itself or an intermediate ancestor object. The principle is that an object’s data retention period is that of the one explicitly set on it, and if not set, inherited from its nearest ancestor.

If you are ever not sure what the parameter configuration for a Snowflake object is, you can use the SHOW PARAMETERS statement.

SHOW PARAMETERS FOR [ACCOUNT|DATABASE|SCHEMA|TABLE] <object_name>;

It’s worth noting that there are different types of tables such as temporary, transient, and permanent and the allowable data retention values vary by type.

Temporary and transient tables can have a data retention value of 0 or 1, while permanent tables may have a value ranging from 0 to 1 (Standard edition) or 90 (Enterprise edition and beyond). Snowflake provides a summary of this information as a table in their documentation.

Querying/cloning historical data/objects

Once we have configured the DATA_RETENTION_TIME_IN_DAYS parameter for objects in our Snowflake account and have some data stored, we can start writing queries that fetch historical data or clone historical objects. We can write time sensitive SELECT queries as well as CREATE…CLONE queries by using the AT or BEFORE clause.

The clauses are appropriately named as they convey precisely what they do. We can query historical data from an object as it was at an exact point in time or right before a point in time. The value we provide to the AT or BEFORE clauses may be a timestamp, offset from the present moment in seconds, or statement ID. These queries will fail, however, if we provide values that exceed the data retention period for an object or any of its children.

SELECT queries

The structure of a SELECT query with the AT or BEFORE clause is as follows.

SELECT *

FROM <table>

[AT|BEFORE]([TIMESTAMP|OFFSET|STATEMENT] => <value>);

Here’s an example showing a how we might query historical data from a table at the point in time before a query with the specified ID was executed.

SELECT name, email

FROM users

BEFORE(STATEMENT => '82b3fd4z-3598-255d-116q-fc931111z00r');

CREATE…CLONE queries

The structure of a CREATE…CLONE query with the AT or BEFORE clause is like this.

CREATE [DATABASE|SCHEMA|TABLE] <new_object_name>

CLONE <existing_object_name>

[AT|BEFORE]([TIMESTAMP|OFFSET|STATEMENT] => <value>);

We can use the following query to clone a table as it was 3 days ago.

CREATE TABLE users_3days_ago

CLONE users

AT(OFFSET => -60*60*24*3);

The Snowflake documentation has a few more examples if you need more to get started.

Undropping historical objects

We all make mistakes. We hope these mistakes don’t include things like dropping database objects that people and systems rely on, but in the event this does happen, Snowflake allows us to hit undo with the UNDROP statement that allows for the most recent version of a dropped database, schema, or table to be restored.

DROP [DATABASE|SCHEMA|TABLE] <object_name>; -- OOPS!

UNDROP [DATABASE|SCHEMA|TABLE] <object_name>; -- I'M BACK!

Dropped objects stick around in the system for the amount of time for which Time Travel is configured for a given object.

Additionally, objects can only be restored in the current database or schema so it’s a good practice to specify the database or schema to use before running the UNDROP statement. This is necessary even if an object’s fully-qualified name is used.

-- Undrop a database

UNDROP DATABASE <database_name>;-- Undrop a schema

USE DATABASE <database_name>;

UNDROP SCHEMA <schema_name>;-- Undrop a table

USE DATABASE <database_name>;

USE SCHEMA <schema_name>;

UNDROP TABLE <table_name>;

If you’ve somehow managed to dig further into your mistake and not only dropped a database object but also created a substandard replacement with the same name, you can still recover the dropped object. However, you will have to rename the substandard replacement before undropping the original object.

ALTER [DATABASE|SCHEMA|TABLE] <object_name> RENAME <another_name>;

/*

* ... appropriate USE statements as previously shown

*/

UNDROP [DATABASE|SCHEMA|TABLE] <object_name>;

Allowing users to recover from errors and mistakes is an essential design principle and Snowflake nailed it!

You can read more details about restoring dropped database objects in the Snowflake documentation.

Conclusion

In this post, I provided an overview of Snowflake’s Time Travel feature that allows users to query historical data. I also showed how to configure Time Travel using the DATA_RETENTION_TIME_IN_DAYS parameter on Snowflake account, database, schema, and table objects. SELECT, CREATE…CLONE, and UNDROP statements that leverage Time Travel capabilities were also highlighted.

While everyone gets to time travel, some of us will be able to time travel further into the past than others due to our subscription tier or storage cost constraints. Check out the Snowflake documentation for details on how storage costs are affected by Time Travel configurations.

References

- Snowflake. Snowflake Time Travel & Fail-safe

- Snowflake. Storage Costs for Time Travel and Fail-safe

- Snowflake. Understanding & Using Time Travel

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.