
How to build a Model Context Protocol (MCP) server for extending LLM capabilities with custom tools and resources.

Often, when using AI, we aim to minimize distractions from the vast information on the internet and focus instead on a specific workspace or a defined set of data.

Consider this scenario: you've recently started working at a new company. The best way to familiarize yourself with the organization is by referring to its knowledge base. To effectively carry out your initial tasks, you need reliable information from that knowledge base.

Similarly, when working with large language models (LLMs), it's essential to have a dependable source of truth, especially when dealing with data or information that isn't publicly available.

This is where MCP comes into play.

In this article, we will explore MCP, understand how it functions, and build an MCP server from scratch.

What is MCP?

The Model Context Protocol (MCP) is an open protocol that provides a consistent way to expose specific data and services to AI agents as context.

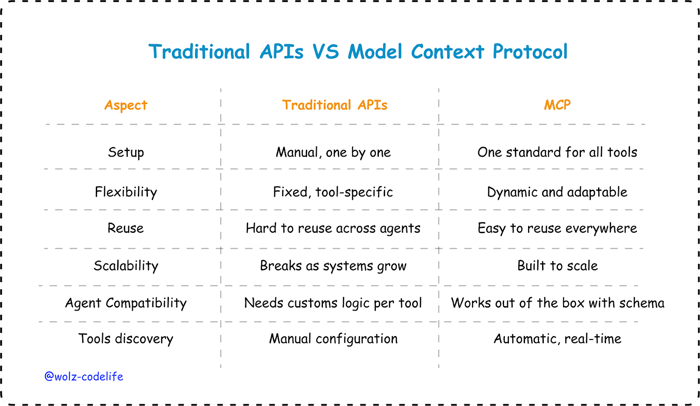

For AI agents to perform effectively in real-life applications, they require the appropriate context. Before the introduction of MCP, developers had to build a custom integration or plugin for each data source, which was difficult to scale. With MCP, it's as simple as plug and play.

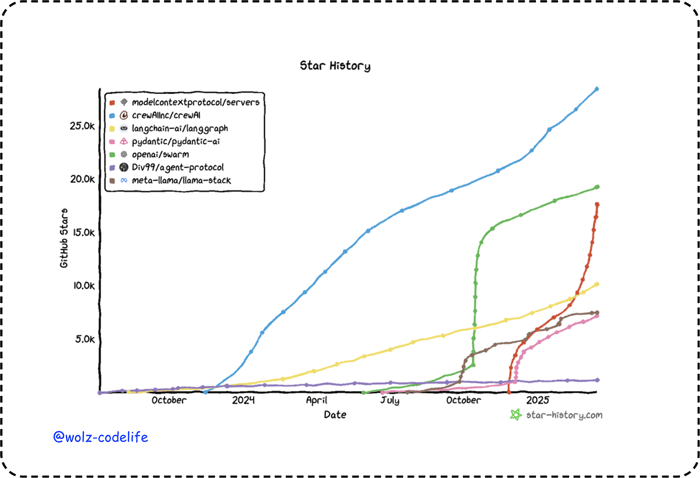

Anthropic first introduced MCP in November 2024, and the initial response was lukewarm. Since then its popularity has grown quickly: it has already surpassed LangChain and shows promise of overtaking OpenAI soon. Major players in the AI industry, along with open-source communities, are rallying around MCP, viewing it as a potential game-changer for developing agentic AI systems. So, why is it gaining traction now?

There are a couple of reasons why MCP is trending:

AI agent integration: In 2023-2024, AI agents and agentic workflows became significant buzzwords; however, integrating these agents with real-world data and systems proved difficult to scale. MCP addresses this by offering a standard way to connect existing data sources, such as files, databases, and APIs, to AI workflows.

As highlighted in Forbes, MCP represents a significant advancement in the functionality of AI agents. Rather than simply responding to questions, these agents can now execute valuable multi-step tasks, such as retrieving data, summarizing documents, or saving content to a file.

Community and adoption: MCP has evolved from just an idea into a full-fledged ecosystem. Early adopters who began integrating MCP into their systems have initiated a movement to standardize the operation of AI agents for the future. By February 2025, more than 1,000 open-source MCP servers had been created, and major industry players had integrated MCP, including:

Block: Using MCP to hook up internal tools and knowledge sources to AI agents.

Replit: Integrated MCP so agents can read and write code across files, terminals, and projects.

Apollo: Using MCP to let AI pull from structured data sources.

Sourcegraph and Codeium: Plugging it into dev workflows for smarter code assistance.

Microsoft Copilot Studio now supports MCP too, making it easier for non-developers to connect AI to data and tools, no coding required.

How Does MCP Work?

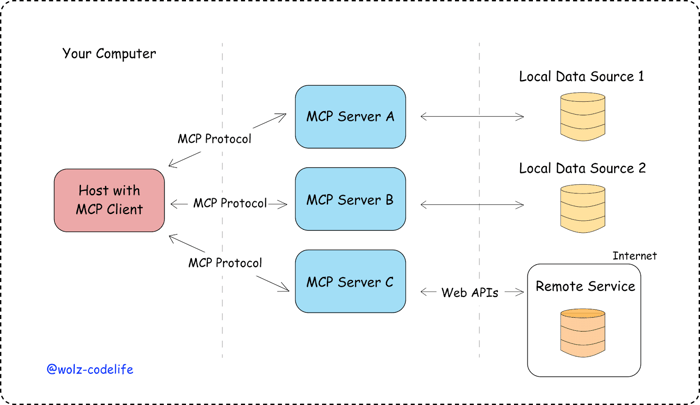

MCP uses a client-server architecture with the following main components:

MCP Host: The AI agent. For example, Claude Desktop, an IDE, or another tool that wants to access data through MCP.

MCP Clients: Protocol clients within the host application, each maintaining a 1:1 connection with a server.

MCP Servers: Lightweight programs (microservices) that access local resources (like a file system or database on your computer) and provide specific context data to the AI agent.

Data Sources: The actual tools or data sources that the MCP servers can securely access for the AI agent to use. These could be:

Local Data Sources: files on your device, a folder, an app running locally.

Remote Services: External systems available over the internet (e.g., through APIs), like cloud databases, SaaS tools, and web APIs.

Like many RPC-style APIs, the protocol uses JSON-RPC 2.0 messages for communication between its components (hosts, clients, and servers).
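To make the message format concrete, here is a minimal sketch of what a JSON-RPC 2.0 request and response might look like when a client asks a server to run a tool. The `tools/call` method and its `name`/`arguments` parameters follow the MCP specification; the helpers `make_request` and `make_result`, the tool name, and the simplified result payload are illustrative assumptions rather than part of any SDK.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request (hypothetical helper, not an SDK call)."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": method,
        "params": params,
    })


def make_result(request_id: int, result: dict) -> str:
    """Serialize a JSON-RPC 2.0 success response carrying the same id."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# A client asking a server to invoke a tool named "execute_code".
request = make_request(
    1,
    "tools/call",
    {"name": "execute_code", "arguments": {"code": "print(2 + 2)"}},
)

# Roughly what a server might send back; the result shape is simplified here.
response = make_result(1, {"content": [{"type": "text", "text": "4\n"}]})

print(request)
print(response)
```

The `id` field is what lets a client pair each response with the request that triggered it, which matters once several calls are in flight over one connection.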

MCP takes some inspiration from the Language Server Protocol (LSP), which standardizes support for programming languages across a whole ecosystem of development tools. In the same way, MCP standardizes how additional context and tools are integrated across the AI ecosystem.

Building Your Own MCP

Building your own Model Context Protocol (MCP) server allows you to create custom tools and resources that large language models (LLMs) like Claude can interact with, enabling dynamic and extensible AI applications. The official MCP documentation from Anthropic, which is open-source, provides comprehensive specifications and SDKs for various programming languages. In this article, we'll walk through building a simple MCP server in Python using the FastMCP class from the mcp package. The server exposes a tool that executes Python code in a separate process with a timeout and a resource that retrieves stored code snippets, two practical use cases for LLMs.

Prerequisites

Ensure you have Python 3.10 or higher installed. We recommend using uv for project management, but pip works too. Set up a new project and install the MCP SDK:

    uv init mcp-code-server
    cd mcp-code-server
    uv add "mcp[cli]"

Or with pip:

    pip install "mcp[cli]"

Creating a Code Execution MCP Server

We'll build an MCP server that provides:

A tool to execute Python code in a separate process with a timeout, using subprocess.

A resource to retrieve predefined code snippets from a dictionary (simulating a repository).

The FastMCP class simplifies server creation by managing connections and ensuring protocol compliance. Below is a complete Python script (code_server.py) that implements these features.

    from mcp.server.fastmcp import FastMCP
    import subprocess

    # Initialize the MCP server
    mcp = FastMCP("CodeServer")

    # Simulated code snippet repository
    CODE_SNIPPETS = {
        "hello_world": 'print("Hello, World!")',
        "fibonacci": '''
    def fibonacci(n):
        a, b = 0, 1
        for _ in range(n):
            yield a
            a, b = b, a + b

    print(list(fibonacci(10)))
    ''',
    }

    # Define a tool for executing Python code
    @mcp.tool()
    def execute_code(code: str) -> str:
        """Execute Python code in a subprocess with a 10-second timeout"""
        try:
            result = subprocess.run(
                ["python", "-c", code],
                capture_output=True,
                text=True,
                timeout=10,
            )
            # Prefer stdout; fall back to stderr, then to a placeholder
            return result.stdout if result.stdout else result.stderr or "No output"
        except subprocess.TimeoutExpired:
            return "Error: Code execution timed out after 10 seconds"
        except Exception as e:
            return f"Error: {str(e)}"

    # Define a resource for retrieving code snippets
    @mcp.resource("snippet://{snippet_id}")
    def get_code_snippet(snippet_id: str) -> str:
        """Retrieve a predefined Python code snippet by ID"""
        return CODE_SNIPPETS.get(snippet_id, "Error: Snippet not found")

    if __name__ == "__main__":
        # Serve requests over standard input/output
        mcp.run(transport="stdio")

The server uses the FastMCP class for easy setup: it is initialized as "CodeServer" and communicates over standard input/output. This enables integration with clients like Claude for Desktop while abstracting low-level protocol details, allowing developers to define functionality more easily.

It features a tool called execute_code, decorated with @mcp.tool(), which runs Python code in a child process via subprocess.run and captures its output and errors. A 10-second timeout stops long-running scripts, and the tool returns either the execution result or an error message. Note that a subprocess provides process isolation rather than a true sandbox: the code still runs with the server's permissions. This allows LLMs to dynamically execute user-provided scripts for testing or calculations.
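The timeout behavior is easy to try outside of MCP. The following is a minimal standalone sketch of the same pattern; the function name `run_snippet` and the configurable `timeout` parameter are illustrative, and it uses `sys.executable` instead of a bare `"python"` so the child process runs under the same interpreter as the caller (an assumption that differs slightly from the server script).

```python
import subprocess
import sys


def run_snippet(code: str, timeout: float = 10.0) -> str:
    """Run code in a child interpreter and return stdout, stderr, or a message."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", code],  # same interpreter as the caller
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising this exception
        return f"Error: Code execution timed out after {timeout:g} seconds"
    return result.stdout or result.stderr or "No output"


print(run_snippet("print(2 + 2)"))                           # normal output
print(run_snippet("1 / 0"))                                  # traceback from stderr
print(run_snippet("import time; time.sleep(5)", timeout=1))  # hits the timeout
```

Returning stderr when stdout is empty means the caller, here an LLM, sees the traceback and can attempt a fix rather than receiving an empty string.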

Additionally, the server includes a resource called get_code_snippet, defined with @mcp.resource("snippet://{snippet_id}"), which retrieves Python code snippets from a CODE_SNIPPETS dictionary using a URI pattern. When requested, it returns the snippet or an error for invalid IDs, ideal for code browsing.

The server runs with mcp.run(transport="stdio"), listening for requests and processing tool executions and resource queries, ensuring accessibility and extensibility for future development.

Testing the Server with Claude for Desktop

Test your server in development mode using the MCP Inspector:

    uv run mcp dev code_server.py

This command launches the MCP Inspector, allowing you to interact with the execute_code tool and get_code_snippet resource. For example, you can retrieve the hello_world snippet or execute a simple script like print(2 + 2).

Ensure Claude for Desktop is updated, and add the server to claude_desktop_config.json as described in the MCP documentation. For example:

    {
      "mcpServers": {
        "code-server": {
          "command": "uv",
          "args": [
            "--directory",
            "/ABSOLUTE/PATH/TO/PARENT/FOLDER/CODE_SERVER",
            "run",
            "code_server.py"
          ]
        }
      }
    }

Restart Claude for Desktop to use the server. You can then ask Claude to execute code (e.g., "Run a Python script that calculates 5 + 3") or retrieve a snippet (e.g., "Show me the Fibonacci snippet").
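If you would rather generate this entry than edit JSON by hand, a sketch along the following lines may help. The helpers `build_server_entry` and `add_server` are hypothetical, the "code-server" key is an arbitrary label, and the directory string is the same placeholder used above; writing the result to the actual claude_desktop_config.json location is left out because that path depends on your platform.

```python
import json


def build_server_entry(project_dir: str, script: str = "code_server.py") -> dict:
    """Build the launch entry for one server (hypothetical helper)."""
    return {
        "command": "uv",
        "args": ["--directory", project_dir, "run", script],
    }


def add_server(config: dict, name: str, entry: dict) -> dict:
    """Return a copy of config with the server registered under mcpServers."""
    servers = {**config.get("mcpServers", {}), name: entry}
    return {**config, "mcpServers": servers}


config = add_server(
    {},  # start from an empty config; load your existing file here instead
    "code-server",
    build_server_entry("/ABSOLUTE/PATH/TO/PARENT/FOLDER/CODE_SERVER"),
)
print(json.dumps(config, indent=2))
```

Because `add_server` merges into any existing mcpServers mapping, servers that are already configured are preserved.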

Security Considerations

Sandboxing: The execute_code tool uses subprocess.run with a timeout, which only limits how long a script runs; the code still executes with the server's own permissions. For production, consider real sandboxing (e.g., Docker) to contain malicious code.

Input Validation: The example is basic; in practice, validate snippet_id and code inputs to avoid security vulnerabilities.

Error Handling: The tool handles timeouts and exceptions, but you may want to add restrictions on allowed modules or commands.

Extending the Server

To enhance this server, consider:

Adding more tools, such as code linting or debugging (see prompts in the documentation).

Expanding the resource to read snippets from a file or database.

Using Streamable HTTP transport for production (see Streamable HTTP Transport).

Refer to the MCP documentation for advanced features and examples.
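As one example of the second idea, the snippet dictionary could be populated from files on disk. The sketch below assumes a hypothetical layout of one snippet per .py file, with the file name (minus extension) serving as the snippet ID; `load_snippets` is an illustrative helper, and the temporary directory exists only to make the example self-contained.

```python
import tempfile
from pathlib import Path


def load_snippets(directory: str) -> dict[str, str]:
    """Map each .py file's stem to its contents (assumed layout: one snippet per file)."""
    return {
        path.stem: path.read_text(encoding="utf-8")
        for path in sorted(Path(directory).glob("*.py"))
    }


# Demonstrate with a throwaway directory containing a single snippet file.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "hello_world.py").write_text('print("Hello, World!")', encoding="utf-8")
    snippets = load_snippets(tmp)

print(snippets)
```

In the server script, the result of `load_snippets` could stand in for the hard-coded CODE_SNIPPETS dictionary without changing the resource function.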

What Next?

As you’ve seen, the Model Context Protocol is more than just a specification—it’s a powerful framework for extending LLMs into custom toolchains, data pipelines, and interactive assistants.

In this article, we explored how MCP serves as a bridge between LLMs and specific workspaces, such as a company’s knowledge base, ensuring accurate and targeted responses. We delved into its functionality, from defining tools and resources to handling communication via transports like stdio, and walked through building a Python-based MCP server using the FastMCP class. Our example demonstrated a practical application—executing Python code and retrieving code snippets—showcasing how MCP can empower LLMs to perform dynamic tasks securely.

Moving forward, you can expand your MCP journey by experimenting with more complex tools, such as database queries or API integrations, as shown in the MCP documentation. Try enhancing the code execution server with additional features like code linting, authentication, or Streamable HTTP transport for production use. You can also explore building MCP clients to interact with your server or integrate it with Claude for Desktop for real-world testing. The open-source MCP SDK and community resources provide a wealth of examples and support to fuel your innovation.

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.