In October 2022, Vercel released version 13 of their web application framework Next.js. In this version, they introduced the first beta of their new app router. It is an alternative to the existing router of Next.js and supports features like streaming and React server components. It also streamlines the definition of API and page routes and will probably become the new default way to build Next.js apps.

With Edge Functions, Vercel also added a new runtime to their cloud offerings. This comes with some benefits over Node.js or serverless function deployments and some constraints. So, it’s worth checking out how to get the app router and Edge Functions working.

This article explains how to define APIs and pages with the new app router, how to deploy them to Vercel Edge Functions, and which issues you should look out for.

Why do you want to use Vercel Edge Functions?

Vercel Edge Functions are an alternative to Vercel (serverless) Functions.

They don’t come with noticeable cold-start delays, making them more suitable for high-frequency workloads. So, an Edge Function might be the better choice if the 250ms cold-start times of serverless functions are too much for your use case.

Lower cold-start times also allow your functions to scale up much faster. This way, they can handle smaller traffic spikes better than serverless functions.

Last but not least, Edge Functions are deployed on Cloudflare Edge Network, so they are geographically closer to your users, lowering function latency quite a bit.

Prerequisites

To follow this guide, you must install Node.js and sign up for a Vercel account.

Creating a Next.js app with the new app router

To create a Next.js app that uses the app router, run the following command:

$ npx create-next-app@latest

Ensure that you answer the following questions like this:

✔ What is your project named? … my-app

✔ Would you like to use TypeScript with this project? … Yes

✔ Would you like to use ESLint with this project? … Yes

✔ Would you like to use Tailwind CSS with this project? … No

✔ Would you like to use src/ directory with this project? … No

✔ Would you like to use the experimental app/ directory with this project? … Yes

✔ What import alias would you like configured? … @/*

After successfully executing this command, you’ll have a new my-app directory for your Next.js project.

Creating an API route

You create API endpoints via route.ts files. The app router uses directory-based routing, so every directory under your project's "my-app/app" directory containing a route.ts file will become a route handled by an API endpoint. This convention means you no longer need a dedicated API directory; your API routes can live alongside the pages of your application.

Instead of "my-app/pages/api/hello.ts", you now have "my-app/app/api/hello/route.ts". The API directory is optional, but you can use one to group your API routes.

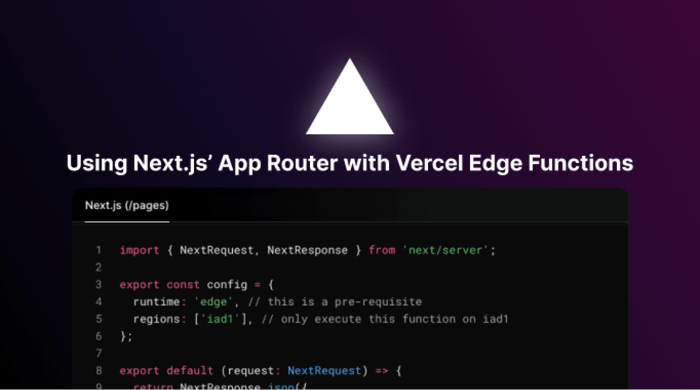
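To make the directory convention concrete, here is a minimal sketch of how a route.ts location relates to the URL it serves. The routeFromFile helper is hypothetical and not part of Next.js; it ignores features such as dynamic segments and only handles plain directories under app/.

```typescript
// Hypothetical helper, not part of Next.js: maps a route.ts file path
// (relative to the project root) to the URL path it would serve.
export function routeFromFile(filePath: string): string | null {
  // Capture everything between "app/" and the trailing "/route.ts", if present
  const match = /^app\/(?:(.*)\/)?route\.ts$/.exec(filePath)
  if (!match) return null // not a route handler inside the app directory
  return "/" + (match[1] ?? "")
}
```

Under these assumptions, "app/api/hello/route.ts" maps to "/api/hello", while the old "pages/api/hello.ts" location is not recognized at all.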

If you open "my-app/app/api/hello/route.ts", you’ll notice that the definition for routes also changed.

API handler with the default router:

    export default async function handler(request: Request) {
      // Only GET requests get a response in this example
      if (request.method === 'GET') return new Response("Hello!")
    }

API handler with the app router:

    export async function GET(request: Request) {
      return new Response("Hello!")
    }

Instead of one handler function, you must export a function for every HTTP method you want to accept; in this case, the route only takes GET requests.
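As a rough sketch of what accepting more than one method could look like, the following route file exports both a GET and a POST function. The JSON body shape with a name field and the greeting in the response are assumptions made up for this example.

```typescript
// app/api/hello/route.ts (sketch)

// Handles GET requests to this route
export async function GET(request: Request) {
  return new Response("Hello!")
}

// Handles POST requests; the { name } body shape is an assumption for this example
export async function POST(request: Request) {
  const body = (await request.json()) as { name?: string }
  const name = body.name ?? "stranger"
  return new Response(JSON.stringify({ greeting: `Hello, ${name}!` }), {
    headers: { "content-type": "application/json" },
  })
}
```

Each exported function only sees requests for its own method, so the method check from the old handler style is no longer needed.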

Fetching remote data

If you want to send a request inside your function, you must use the global fetch function.

    export async function GET(request: Request) {
      const text = await fetch("https://example.com", {
        cache: "no-store",
      }).then((r) => r.text())
      return new Response(text)
    }

The crucial part in this example is the cache: "no-store" option. By default, Next.js will cache all fetch requests, which can lead to confusion, especially with libraries that use fetch in the background to do their work. Usually, they call fetch without that option and are unaware of the caching.
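One possible way to deal with libraries that call fetch themselves is to hand them a wrapped fetch that always sets the option. The sketch below assumes the library accepts a custom fetch implementation; the withNoStore name is hypothetical.

```typescript
// Hypothetical helper: wraps a fetch implementation so that every call
// is made with cache: "no-store", regardless of what the caller passed.
export function withNoStore(baseFetch: typeof fetch): typeof fetch {
  return (input, init) => baseFetch(input, { ...init, cache: "no-store" })
}

// Usage sketch: pass the wrapped function wherever a custom fetch is accepted
// const uncachedFetch = withNoStore(fetch)
```

The wrapper keeps all other request options intact and only overrides the cache setting, so it would not help with libraries that offer no way to swap out fetch.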

Streaming responses

A nice feature of the new app router is its ability to stream data to the client. A response no longer has to be delivered all at once.

Suppose you’re using the NDJSON format, which delivers multiple JSON objects in one response, delimited by newline characters. Your clients can then parse each object as soon as its line arrives and don’t have to wait for the complete response to finish.

Check out this example that delivers 10 JSON objects spaced by 1 second in one HTTP response:

    export async function GET(request: Request) {
      const readable = new ReadableStream({
        async start(stream) {
          const encoder = new TextEncoder()
          for (let i = 1; i <= 10; i++) {
            await new Promise((r) => setTimeout(r, 1000))
            stream.enqueue(
              encoder.encode(`{"id": ${i}, "data": "${Math.random()}"}\n`)
            )
          }
          stream.close()
        },
      })
      return new Response(readable, {
        headers: { "content-type": "application/x-ndjson" },
      })
    }
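On the receiving side, a client could consume such a response line by line. The following is a minimal sketch of that idea; the readNdjson helper is hypothetical and assumes every line holds one complete JSON object.

```typescript
// Hypothetical client-side helper: yields one parsed object per NDJSON line.
export async function* readNdjson<T = unknown>(
  body: ReadableStream<Uint8Array>
): AsyncGenerator<T> {
  const reader = body.getReader()
  const decoder = new TextDecoder()
  let buffer = ""
  try {
    while (true) {
      const { done, value } = await reader.read()
      if (done) break
      // A chunk may end in the middle of a line, so keep the remainder buffered
      buffer += decoder.decode(value, { stream: true })
      let newline = buffer.indexOf("\n")
      while (newline >= 0) {
        const line = buffer.slice(0, newline).trim()
        buffer = buffer.slice(newline + 1)
        if (line) yield JSON.parse(line) as T
        newline = buffer.indexOf("\n")
      }
    }
    // Flush whatever is left in case the last line had no trailing newline
    buffer += decoder.decode()
    const rest = buffer.trim()
    if (rest) yield JSON.parse(rest) as T
  } finally {
    reader.releaseLock()
  }
}
```

A caller would typically pass in the body of a fetch response and handle each object inside a for await loop as it arrives.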

Enabling the edge runtime

Now, to ensure that Vercel will deploy your API to Edge Functions, you have to add the following line of code to that file:

export const runtime = "experimental-edge"

Right now, Vercel doesn’t consider Edge Functions production ready for the app router, but once they stabilize, the experimental flag will probably become just "edge".
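Put together, a complete route file could look roughly like the sketch below. The greeting text is made up for illustration; the runtime value is the experimental one named above and may differ in later versions.

```typescript
// app/api/hello/route.ts (sketch)

// Module-level export that opts this route into the edge runtime.
// The value is the experimental flag named above and may change later.
export const runtime = "experimental-edge"

export async function GET(request: Request) {
  return new Response("Hello from the edge!")
}
```

The runtime export sits next to the method handlers in the same file, so it applies per route.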

Edge Function constraints

While Edge Functions have low latency and fast startup times, some limitations exist.

No Node.js environment

Edge Functions don’t run in Node.js, so Node.js-specific functionality like the path module or __dirname isn’t available. The same goes for eval and the Function constructor. If your use case requires any of these features, you must use the (default) serverless runtime.

You can generally assume the Edge Function environment is much closer to a browser environment than a Node.js environment. As seen above, loading remote data is done via the fetch function, not the http module, as you would do in Node.js. You can find supported APIs in the Vercel docs.

If you want to use an npm package that expects Node.js, you might need some polyfills to get going.
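In many cases, a web-standard API can stand in for a Node.js module. As a small sketch, the hypothetical lastPathSegment helper below uses the URL class, which is also available in browsers, for something you might otherwise do with the path module.

```typescript
// Hypothetical helper: returns the last path segment of a request URL
// using only the web-standard URL class, without Node's path module.
export function lastPathSegment(requestUrl: string): string {
  const { pathname } = new URL(requestUrl)
  const segments = pathname.split("/").filter(Boolean)
  if (segments.length === 0) return ""
  return decodeURIComponent(segments[segments.length - 1])
}
```

Inside a route handler, this could be called with request.url to find out which resource the client asked for.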

Resource Limits

The available resources aren’t endless.

- Vercel terminates your function if it uses more than 128MB of memory
- On the Hobby plan, you can only deploy 1MB of code, 2MB on the Pro plan, and 4MB on the Enterprise plan
- Each environment variable can only be 5KB and together can’t exceed 64KB
- Requests to an Edge Function have limitations too
  - Each request can only have 64 headers, and each of them is limited to 16KB
  - 4MB body size
  - 14KB URL length
- Each function invocation can only issue 950 fetch requests
- Date.now() will only return a new value when an IO happened
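If you want to catch oversized requests before they reach the platform, for example in a client or a test, a check along these lines might help. It is only a sketch: the numbers are copied from the list above and may change, 1KB is assumed to be 1024 bytes, the 16KB header limit is assumed to apply to the header value, and the checkRequestLimits helper and its input shape are hypothetical.

```typescript
// Request limits as listed above; assumes 1KB = 1024 bytes and 1MB = 1024KB.
const KB = 1024
const LIMITS = {
  maxHeaders: 64,
  maxHeaderBytes: 16 * KB,
  maxBodyBytes: 4 * KB * KB,
  maxUrlBytes: 14 * KB,
}

// Hypothetical description of an outgoing request
interface RequestShape {
  url: string
  headers: Record<string, string>
  bodyBytes: number
}

// Returns a list of human-readable problems; an empty list means no limit was hit.
export function checkRequestLimits(req: RequestShape): string[] {
  const encoder = new TextEncoder()
  const byteLength = (text: string) => encoder.encode(text).length
  const problems: string[] = []
  const names = Object.keys(req.headers)
  if (names.length > LIMITS.maxHeaders) problems.push("too many headers")
  for (const name of names) {
    // Assumption: the per-header limit is measured on the header value
    if (byteLength(req.headers[name]) > LIMITS.maxHeaderBytes) {
      problems.push(`header "${name}" is too large`)
    }
  }
  if (req.bodyBytes > LIMITS.maxBodyBytes) problems.push("body is too large")
  if (byteLength(req.url) > LIMITS.maxUrlBytes) problems.push("URL is too long")
  return problems
}
```

The platform enforces these limits either way; a check like this only makes the failure easier to diagnose on your side.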

Execution Time Limits

While execution time on Edge Functions isn’t limited, you have 30 seconds after receiving a request to send a response, which can be a regular or streaming response.

Since the new app router supports streaming responses, it’s well-suited for Edge Functions needing more time to complete their work. Start streaming immediately to satisfy the 30-second constraint, kick off the work that takes longer, and then enqueue results as they come in.
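The pattern described here could be sketched as follows. The streamResults helper and the idea of passing the slow work as a list of task functions are assumptions for illustration; the response object is created right away and results are written as NDJSON lines once each task finishes.

```typescript
// Hypothetical helper: returns a streaming response immediately and
// enqueues one NDJSON line per task as soon as that task has finished.
export function streamResults(tasks: Array<() => Promise<unknown>>): Response {
  const encoder = new TextEncoder()
  const readable = new ReadableStream<Uint8Array>({
    async start(controller) {
      try {
        for (const task of tasks) {
          const result = await task() // the slow work happens here
          controller.enqueue(encoder.encode(JSON.stringify(result) + "\n"))
        }
        controller.close()
      } catch (error) {
        // Signal the failure to the client instead of leaving the stream open
        controller.error(error)
      }
    },
  })
  return new Response(readable, {
    headers: { "content-type": "application/x-ndjson" },
  })
}
```

A GET handler would simply return streamResults([...]) with its long-running tasks. The tasks run one after another in this sketch; running them concurrently would need extra care around ordering.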

Next.js’ app router and Vercel Edge Functions are a powerful team

Edge Functions start instantly, and you can deploy them near your users, making them well-suited for computations that only take a few milliseconds to execute.

The new app router gives you more flexibility in structuring your code. With features like streaming responses, you can lower latency even more by providing clients with data before the work is finished. Streaming also makes the app router a good fit for Edge Functions when the processing time exceeds 30 seconds.
