Learn about Edge Computing and its impact on modern application infrastructure.

The rise of modern digital applications has been accompanied by a growing demand for faster and more efficient computing resources. While traditional cloud computing models have revolutionized the industry, they are increasingly proving insufficient to meet the needs of modern applications. Edge computing has emerged as a new paradigm that redistributes computational resources and data storage to the network periphery, resulting in unparalleled efficiency and responsiveness.

Edge computing is not just an evolution of technology, but a revolutionary approach that is transforming the digital landscape and empowering applications to achieve new levels of efficiency and responsiveness. This article aims to provide a comprehensive understanding of edge computing, including its foundational principles, benefits, and real-world examples that showcase its transformative impact across various industries.

We will explore the technical details of edge computing, including its architecture and practical implementation. We will also discuss the challenges associated with edge computing and the innovative solutions shaping its future. Furthermore, we will compare edge and cloud computing, highlighting scenarios where each excels, and discuss emerging trends in edge computing and their implications for the future of technology.

By the end of this article, you will gain a comprehensive understanding of how edge computing is reshaping the digital landscape and empowering applications to reach new standards of efficiency and responsiveness.

Understanding Edge Computing

Edge computing is a distributed computing paradigm that aims to improve response times and save bandwidth by bringing computation and data storage closer to the sources of data. It is a fundamental shift from traditional cloud computing, which relies on centralized data processing and storage. In edge computing, data is processed and stored as close as possible to its origin, reducing latency and optimizing user experiences.

Differences between Edge Computing and Traditional Cloud Computing:

- Decentralization: Edge computing moves computing resources from clouds and data centers as close as possible to the data source, leveraging physical proximity to the end-user. This approach reduces latency and improves response times, making it more suitable for real-time applications.

- Data Processing: In edge computing, data is processed locally, often on the edge device itself, before being transmitted to the cloud for further processing. This reduces the amount of data sent over the network, saving bandwidth and optimizing connectivity.

- Data Sovereignty: By processing data closer to its source, edge computing can keep data within the location or jurisdiction where it originates, which supports data sovereignty requirements and reduces the risk of data loss due to network constraints.

Decentralization is crucial in processing and storage because it results in reduced latency, faster response times and improved user experiences. It also lowers cost, because less bandwidth and fewer network resources are consumed when large amounts of data no longer need to be transmitted. Decentralization allows for scalable solutions, adapting to the growing needs of businesses and industries. Edge computing can also reduce the exposure of data in transit, because less data has to leave the local environment, although the distributed nature of edge devices introduces security challenges of its own.
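To make the bandwidth point concrete, here is a minimal sketch of local processing on an edge device: raw readings are summarized in fixed-size windows, and only the summaries would be sent to the cloud. The function names, the window size, and the sample readings are all hypothetical, chosen for illustration only.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


def batch_for_upload(readings, window_size):
    """Split readings into fixed-size windows and summarize each one."""
    return [
        summarize_window(readings[i:i + window_size])
        for i in range(0, len(readings), window_size)
    ]


# Hypothetical temperature readings collected on the device.
raw = [21.0, 21.5, 22.0, 35.0, 21.0, 20.5]
summaries = batch_for_upload(raw, window_size=3)
print(summaries)
```

In this sketch six raw values become two summary records. A real deployment would choose the window and the summary fields based on what the cloud-side analysis actually needs.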

By understanding these fundamental principles and the differences between edge and traditional cloud computing, you can grasp the potential of edge computing to revolutionize modern applications and reshape the digital landscape.

Edge Computing vs. Cloud Computing:

Edge computing and cloud computing are two distinct models of computing that differ in their approach to data processing and storage. Edge computing involves processing data at or near the source of data creation, such as a sensor or device, reducing latency and improving response times. It is ideal for scenarios where data needs to be processed quickly, such as in remote locations with limited or no connectivity to a centralized location, where delays in decision-making processes can result in significant losses.

On the other hand, cloud computing relies on centralized servers housed in large-scale data centers and is preferred for non-time-sensitive data. This model is well suited to IoT deployments in which devices generate large amounts of data that require analysis and storage.

While edge and cloud computing are not interchangeable technologies, they can complement each other in a hybrid model. In this model, critical tasks demanding real-time processing can be offloaded to edge devices, while less time-sensitive data processing occurs in the cloud. This harmonious collaboration ensures a balanced allocation of resources, optimizing both latency-sensitive and non-time-sensitive aspects of an application.
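The hybrid split described above can be sketched as a simple placement rule: a task runs at the edge when the cloud round trip alone would exceed its latency budget, and in the cloud otherwise. The `Task` class, the task names, and the 120 ms round-trip figure are assumptions made for this example, not measurements.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    max_latency_ms: float  # how long the caller can wait for a result


# Assumed round-trip estimate to the cloud; measure this in a real deployment.
CLOUD_ROUND_TRIP_MS = 120.0


def choose_target(task, cloud_round_trip_ms=CLOUD_ROUND_TRIP_MS):
    """Return "edge" when the cloud round trip would exceed the task's budget."""
    return "edge" if task.max_latency_ms < cloud_round_trip_ms else "cloud"


# Hypothetical workloads: one latency-critical, one not.
tasks = [Task("brake-assist", 10.0), Task("nightly-report", 60_000.0)]
placement = {t.name: choose_target(t) for t in tasks}
print(placement)
```

A production scheduler would likely also weigh edge capacity, data size, and cost, but latency budget is the criterion this article emphasizes.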

As technology advances, the line between edge and cloud computing continues to blur, giving rise to innovative architectures that leverage the strengths of both paradigms. It is crucial for organizations to understand the differences between these two paradigms to make informed decisions about their computing infrastructure and ensure that they are using the right model for their specific requirements.

Edge Computing Architecture

Edge computing architecture is a decentralized model that redistributes computational power closer to the data source. This approach minimizes latency and optimizes processing efficiency, resulting in improved outcomes and reduced costs in various industries.

The architecture involves an ecosystem of infrastructure components that have been dispersed from centralized data centers. These components include compute and storage capabilities, applications, and sensors. Edge devices and sensors play a critical role in collecting and processing information. They provide sufficient bandwidth, memory, and processing power to process data in real time without assistance from the rest of the network.

Core Components of Edge Computing Architecture

- Edge Devices and Sensors: These are the endpoints where data is collected, processed, or both, providing the necessary resources to process data in real time without assistance from the rest of the network.

- On-Premises Edge Servers or Data Centers: Deployed at the edge with network connectivity back to a central data center or cloud, allowing for processing and storage closer to the data source.

- Applications and Data Platforms: Deployed at the edge, enabling real-time processing, analysis, and decision-making at the edge of the network, leading to improved outcomes and reduced costs in various industries.

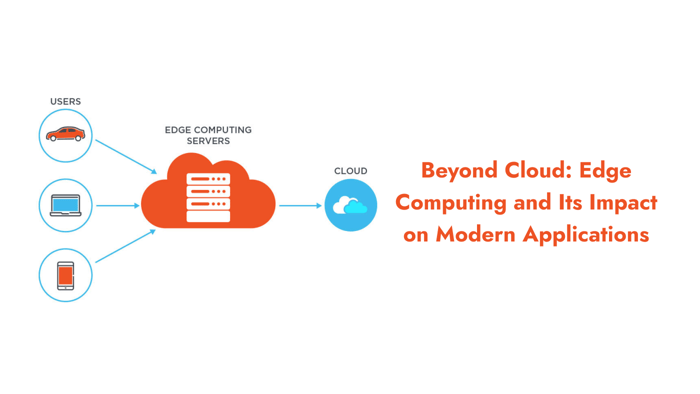

Here is an illustration of Edge Computing architecture:

The image above shows the different layers of an edge computing system. The top layer consists of cloud data centers, including a central data center and regional data centers. These centers remain crucial in an edge computing system because they hold the system-wide data. However, local applications do not depend on them as heavily.

The next layer is the "edge" layer and can be represented in various scenarios like a restaurant, retail shop, oil platform, aeroplane or mobile medical clinic. The edge layer has edge data centers and IoT gateways connected through a local network, which could be fiber, wireless, 5G, or 4G.

Edge devices include smartphones, tablets, laptops, and IoT devices. These devices communicate with the edge data center, and there is also inter-device communication through a private network like RF or Bluetooth.

Despite the illustration showing only one edge data center, a business ecosystem could have many edge data centers. For example, a chain of retail stores might have edge data centers in each city with stores to power Point of Sale (POS) systems.

This architecture emphasizes the significant role of both cloud data centers and edge data centers in the overall computing framework. It clarifies the relationship between different layers and devices in a straightforward way that is accessible to people with limited technical knowledge.

Impact of Edge Computing on Modern Applications

Edge computing is revolutionizing modern applications by enhancing their performance, reducing latency, and optimizing user experiences. By processing data closer to its source, edge computing reduces the time it takes for data to travel to the cloud and back, resulting in faster response times and improved user experiences.
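A rough calculation shows why distance matters. The sketch below estimates round-trip propagation delay only, using the approximate speed of light in optical fiber (about two-thirds of its speed in a vacuum). The 2000 km and 20 km distances are hypothetical, and real latency also includes routing, queuing, and processing time.

```python
# Approximate speed of light in optical fiber (about two-thirds of c).
FIBER_SPEED_M_PER_S = 2.0e8


def propagation_rtt_ms(distance_km):
    """Round-trip propagation delay in milliseconds over a fiber path."""
    one_way_s = (distance_km * 1000) / FIBER_SPEED_M_PER_S
    return 2 * one_way_s * 1000


cloud_rtt = propagation_rtt_ms(2000)  # hypothetical distant cloud region
edge_rtt = propagation_rtt_ms(20)     # hypothetical nearby edge site
print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")
```

Under these assumptions the distant region costs about 20 ms per round trip before any processing happens, while the nearby edge site costs about 0.2 ms.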

Real-world examples of modern applications benefiting from edge computing include:

- Healthcare: Edge computing enables real-time processing, analysis, and decision-making at the edge of the network, leading to improved patient outcomes and reduced healthcare costs.

- Transportation: Edge computing is being used to process data from IoT sensors in real time, enabling transportation companies to optimize routes, reduce fuel consumption, and improve safety.

- Manufacturing: Edge computing is being used to optimize production lines and improve quality control by processing data from IoT sensors in real time.

Edge computing's impact on modern applications goes beyond just improving performance and reducing latency. It also enables new possibilities for innovation, enhances data privacy and security, and reduces costs. By processing data closer to its source, edge computing reduces the amount of data sent over the network, saving bandwidth and optimizing connectivity.

In addition to these benefits, edge computing also offers a more scalable solution, adapting to the growing needs of businesses and industries. By leveraging physical proximity to the end-user, edge computing ensures data sovereignty and reduces the risk of data loss due to network constraints. As we continue to explore its potential, we can expect to see even more innovative applications and industries benefiting from this revolutionary approach to computing.

Challenges Facing Edge Computing and Solutions

The adoption of edge computing poses a variety of challenges for organizations, covering aspects such as network connectivity, security, scalability, data management, and device deployment. These challenges can impede the full realization of the potential advantages of edge computing if not adequately tackled. However, there are various solutions and best practices available to overcome these obstacles, guaranteeing the secure, efficient, and reliable implementation of edge computing solutions.

- Connectivity and Network Constraints: Ensuring reliable connectivity is crucial, especially in remote locations where access to centralized networks is limited, which can result in network bandwidth constraints and intermittent connectivity issues. One way to address this is by implementing fog computing and integrating with the cloud, which can transfer intensive processing tasks to more centralized locations, allowing edge devices to focus on critical local computations. This approach can lead to more efficient network utilization and improve the reliability of edge devices.

- Security and Data Privacy: Edge computing presents security and privacy challenges due to the distributed nature of edge devices, which increases the attack surface and potential vulnerabilities. To ensure the protection of sensitive data at the edge, robust security measures such as encryption, authentication protocols, and access control are necessary. Edge management platforms and automation tools can help in device provisioning and software deployment, thereby minimizing security risks.

- Scalability and Resource Management: Scalable solutions in edge computing pose challenges in managing highly distributed environments and the complexity associated with the deployment and management of edge infrastructure. Standardized operating system configurations and cluster orchestration are essential in managing distributed environments effectively. Automation tools simplify device provisioning and streamline operations, offering a solution to the scalability and resource management challenges.

- Data Synchronization and Consistency: Maintaining data synchronization and consistency across distributed edge environments is a significant challenge that requires attention. Standardized operating system configurations and cluster orchestration play a vital role in ensuring data synchronization and consistency in edge computing environments. These measures streamline operations across the distributed landscape, making them more efficient and effective.

- Edge Device Management and Monitoring: Managing and monitoring edge devices can be challenging because devices must be managed, updated, and given new application deployments remotely. However, edge management platforms and automation tools can simplify device provisioning, software deployment, and remote management. These tools help to reduce the complexity of managing edge computing infrastructure and devices.

- Evolving Security Measures in Edge Computing: As edge computing becomes more prevalent, it also introduces unique challenges in maintaining privacy and security against evolving cyber threats. To address these challenges, it is essential to continuously adapt to emerging security standards and to implement encryption and authentication protocols, so that data at the edge is processed efficiently and protected securely.
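For the intermittent connectivity challenge in the list above, one common complement to the approaches mentioned is a store-and-forward buffer: the device queues messages locally while the uplink is down and flushes them in order once it returns. The following is a minimal sketch, not a production implementation. The class name, the `fake_send` function, and the `uplink` flag are hypothetical stand-ins for a real transport.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue messages locally and flush them when the uplink is available."""

    def __init__(self, send, max_items=1000):
        self._send = send                      # callable returning True on success
        self._queue = deque(maxlen=max_items)  # oldest items drop first when full

    def publish(self, message):
        """Queue a message, then try to flush; returns the number sent."""
        self._queue.append(message)
        return self.flush()

    def flush(self):
        """Send queued messages in order; stop at the first failure."""
        sent = 0
        while self._queue:
            if not self._send(self._queue[0]):
                break
            self._queue.popleft()
            sent += 1
        return sent

    def pending(self):
        return len(self._queue)


# Hypothetical uplink that can be switched on and off for the demo.
uplink = {"online": False}
delivered = []


def fake_send(message):
    if uplink["online"]:
        delivered.append(message)
    return uplink["online"]


buffer = StoreAndForwardBuffer(fake_send)
buffer.publish({"temp": 21.5})  # link down: message is queued
buffer.publish({"temp": 21.7})  # link down: message is queued
uplink["online"] = True
buffer.publish({"temp": 21.9})  # link up: all three are sent in order
print(delivered, buffer.pending())
```

The bounded queue is a deliberate trade-off: on a device with limited memory, dropping the oldest readings is often preferable to running out of storage, but the right policy depends on the application.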

Organizations can overcome the challenges of edge computing by adopting practical solutions and best practices. This will help in creating efficient, secure, and reliable deployments that enhance performance and improve user experiences. It is important to stay updated with the ever-evolving security landscape to maintain the integrity of edge computing implementations in the face of constantly changing cyber threats.
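As one illustration of the authentication measures mentioned above, the sketch below signs a device payload with HMAC-SHA256 from Python's standard library so that a receiver can detect tampering. Note that this provides integrity and authenticity, not confidentiality, so it would be combined with encryption in transit in practice. The key, the device name, and the payload are placeholders.

```python
import hashlib
import hmac
import json


def sign_payload(payload, key):
    """Serialize a payload deterministically and compute an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return body, tag


def verify_payload(body, tag, key):
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, body, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)


# Placeholder key for illustration only; provision real keys securely per device.
device_key = b"example-device-key"
body, tag = sign_payload({"device": "sensor-1", "temp": 21.5}, device_key)

ok = verify_payload(body, tag, device_key)
tampered = verify_payload(body + b" ", tag, device_key)
print(ok, tampered)
```

The untouched message verifies, while the modified one is rejected. Using `hmac.compare_digest` rather than `==` avoids leaking information through comparison timing.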

Conclusion

In conclusion, edge computing is a technology that is reshaping the digital landscape and enabling applications to achieve higher efficiency and responsiveness. By processing data closer to its source, edge computing reduces the time it takes for data to travel to the cloud and back, resulting in faster response times and improved user experiences.

In this article, we explored the foundational principles of edge computing, its benefits, and real-world examples showcasing its impact across various industries. We also discussed the challenges associated with implementing edge computing and the innovative solutions shaping its future. Additionally, we compared edge and cloud computing, highlighting scenarios where each excels.

As we continue to explore the potential of edge computing, we can expect to see even more innovative applications and industries benefiting from this approach to computing. The impact of edge computing on modern applications will undoubtedly leave a lasting mark on the digital landscape, and its evolution will continue to shape the future of technology.

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.