A guide to the next-generation compute architectures that go beyond VMs and containers to deliver scalable, resilient infrastructure.

For over a decade, virtual machines (VMs) and containers have been the backbone of modern infrastructure. By decoupling software from the underlying hardware, they give developers flexible environments where applications run consistently, regardless of where they're hosted. A VM behaves like a complete virtual computer, so multiple applications, or even different operating systems, can share a single physical machine. Containers, by contrast, focus on the application itself: they bundle just the code and its required libraries, which makes them lightweight, fast to start, and easy to move between environments.

These two technologies have played a critical role in the growth of cloud-native applications, continuous integration and delivery (CI/CD) pipelines, and microservices architectures. Many teams use both: containers for their portability and consistency, and VMs for their strong isolation and ability to replicate complete environments. This hybrid approach has helped organizations scale and secure their applications while maintaining reliability across multiple platforms.

However, as infrastructure demands continue to evolve, these once-groundbreaking tools are starting to show their limits. VMs are slow to provision and consume considerable resources, while containers add complexity to orchestration, security, and lifecycle management. For organizations aiming for high performance, low-latency operations, and simpler workflows, the traditional solutions are no longer enough.

This article discusses what comes after VMs and containers, exploring the new compute architectures and principles that are reshaping scalability, resilience, and efficiency in a post-container world.

What are Virtual Machines?

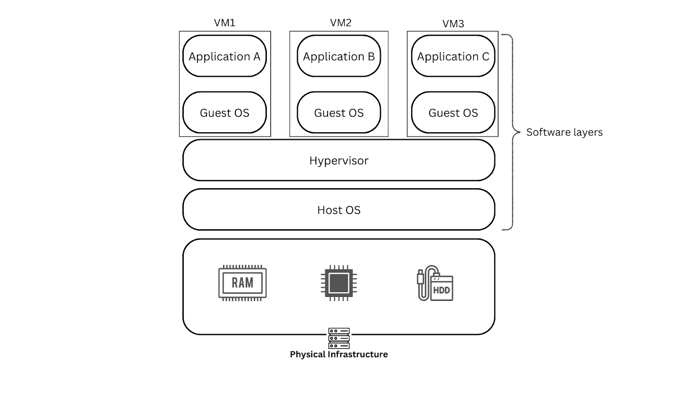

Virtual machines (VMs) are software implementations of physical computers. Each VM provides a fully separate environment with its own operating system and applications, almost as if it were an independent server. This makes VMs fundamental to cloud computing and enterprise IT: many workloads can share the same physical hardware without interfering with one another, so organizations get more out of every server they run.

At the core of VM technology is the hypervisor, a layer of software that sits between the physical machine (the host) and its virtual machines. Think of the hypervisor as a traffic director: it decides how CPU, memory, storage, and network resources are shared among the VMs, so that each one operates as its own separate system. For organizations, this means running multiple workloads on fewer physical machines and saving on hardware costs. It also makes it easy to create or retire virtual machines as demand changes.
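To make the traffic-director analogy concrete, here is a toy model of hypervisor admission control: it tracks a host's physical CPUs and memory and starts a VM only if the request fits. The `Host` and `VMSpec` names are hypothetical; real hypervisors such as KVM or ESXi also time-slice vCPUs and can overcommit memory, which this sketch deliberately ignores.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VMSpec:
    """Resources a VM asks for (hypothetical model, not a real hypervisor API)."""
    name: str
    vcpus: int
    memory_gib: int


@dataclass
class Host:
    """A physical host whose CPUs and memory are partitioned among VMs."""
    cpus: int
    memory_gib: int
    vms: dict[str, VMSpec] = field(default_factory=dict)

    def free(self) -> tuple[int, int]:
        # Capacity not yet reserved by running VMs.
        used_cpu = sum(vm.vcpus for vm in self.vms.values())
        used_mem = sum(vm.memory_gib for vm in self.vms.values())
        return self.cpus - used_cpu, self.memory_gib - used_mem

    def start(self, spec: VMSpec) -> bool:
        # Admit the VM only if both CPU and memory fit (no overcommit in this model).
        free_cpu, free_mem = self.free()
        if spec.vcpus > free_cpu or spec.memory_gib > free_mem:
            return False
        self.vms[spec.name] = spec
        return True

    def stop(self, name: str) -> None:
        # Retiring a VM returns its resources to the host.
        self.vms.pop(name, None)


host = Host(cpus=16, memory_gib=64)
print(host.start(VMSpec("web", vcpus=4, memory_gib=8)))   # True
print(host.start(VMSpec("db", vcpus=8, memory_gib=32)))   # True
print(host.start(VMSpec("ml", vcpus=8, memory_gib=16)))   # False: only 4 vCPUs left
host.stop("web")
print(host.free())                                        # (8, 32)
```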

There are two main types of hypervisors:

Type 1 hypervisors, also known as bare-metal hypervisors, run directly on the machine's hardware without a host operating system in between. The thinner software stack gives them lower overhead, a smaller attack surface, and efficient resource management, which makes them a popular choice in enterprise data centers and among cloud service providers. Well-known examples include VMware ESXi, Microsoft Hyper-V, Xen, and KVM.

Type 2 (hosted) hypervisors run as applications on top of an existing operating system, which makes them user-friendly and easy to set up. That is why they are popular for development, testing, and desktop virtualization. Well-known examples include VMware Workstation and Oracle VirtualBox, both of which let users create and manage virtual machines with little setup.

VMs are highly flexible, and their strong isolation is a security benefit in itself. Because each VM runs its own operating system, different environments can share the same hardware. This makes VMs well suited to a wide range of tasks, from software testing and multi-tenant hosting to disaster recovery and demanding applications such as machine learning.

One of the great things about VMs is the ability to take snapshots and roll back changes. This provides a safety net for administrators, enabling them to recover easily from mistakes or even cyberattacks.
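Disk snapshots are commonly implemented with overlay (delta) layers: taking a snapshot freezes the current layer, new writes land in a fresh top layer, and reverting discards that top layer. The `Disk` class below is a hypothetical toy model of that idea using a dict of blocks; formats such as qcow2, VMDK, and VHDX differ in the details.

```python
class Disk:
    """Toy virtual disk that supports snapshots via overlay layers (hypothetical)."""

    def __init__(self) -> None:
        # layers[0] is the base image; each snapshot stacks a new writable top layer.
        self.layers: list[dict[int, bytes]] = [{}]

    def write(self, block: int, data: bytes) -> None:
        # Writes always land in the top layer; lower layers stay frozen.
        self.layers[-1][block] = data

    def read(self, block: int) -> bytes:
        # Search from the newest layer down; unwritten blocks read as empty here.
        for layer in reversed(self.layers):
            if block in layer:
                return layer[block]
        return b""

    def snapshot(self) -> None:
        # Freeze the current state by stacking a fresh, empty top layer.
        self.layers.append({})

    def rollback(self) -> None:
        # Revert to the latest snapshot by discarding writes made since it.
        # Without any snapshot there is nothing to revert to, so do nothing.
        if len(self.layers) > 1:
            self.layers[-1].clear()


disk = Disk()
disk.write(0, b"config-v1")
disk.snapshot()
disk.write(0, b"config-v2-broken")
print(disk.read(0))  # b'config-v2-broken'
disk.rollback()
print(disk.read(0))  # b'config-v1'
```

Because the snapshot layer is kept after a rollback, an administrator can keep experimenting and reverting to the same known-good state.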

However, all this versatility does come with a downside. VMs typically consume more resources and take longer to set up compared to lighter options like containers. Despite this, they have been crucial in shaping the landscape of modern cloud computing.

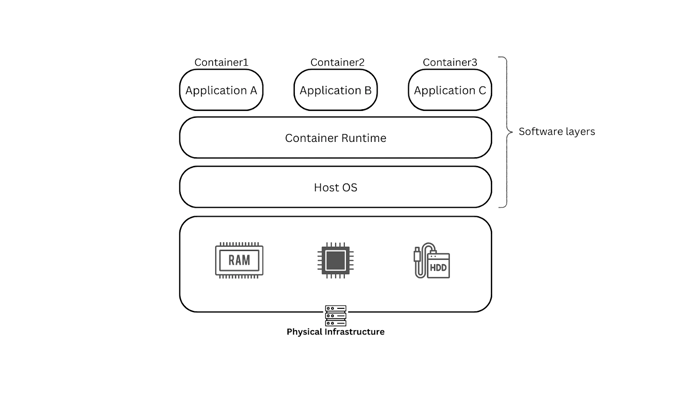

What are Containers?

Containers are like portable boxes for software: each one includes everything an application needs to run, including the code, runtime, system libraries, and configuration settings. Unlike virtual machines, which each run a complete operating system, containers share the host's operating system kernel. Because they virtualize at the operating-system level, containers start faster, take up less space, and use resources more efficiently than virtual machines.

One of the standout features of containers is their portability. Once an application and all its dependencies are packaged into a container, it runs consistently wherever you deploy it, whether on a developer's laptop, in a cloud data center, or on devices at the edge of a network, provided the host offers a compatible kernel and CPU architecture. This "build once, run anywhere" model has changed how software is delivered, especially with the rise of microservices.

Most containers today are designed to run a single service or process in isolation. They are created from read-only templates called images, which can be stored in registries and pulled whenever needed. Docker popularized this container model, and alternatives such as Podman (for running containers) and Buildah (for building images) are also available. In larger environments, orchestration platforms such as Kubernetes handle deploying, scaling, and managing groups of containers, with features like rolling updates, load balancing, and self-healing.
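Kubernetes' self-healing rests on a reconciliation (control-loop) pattern: a controller repeatedly compares the desired state with the observed state and acts to close the gap. The sketch below models only that idea for a replica count; `reconcile` and the in-memory `pods` list are hypothetical and do not use the Kubernetes API.

```python
import itertools

_ids = itertools.count(1)  # source of unique pod names in this toy model


def reconcile(desired: int, pods: list[str]) -> list[str]:
    """Return the actions that move `pods` toward `desired` replicas.

    Mutates `pods` in place, as if each action had succeeded (hypothetical model).
    """
    actions = []
    while len(pods) < desired:
        # Too few replicas (e.g. a pod crashed): start a replacement.
        name = f"web-{next(_ids)}"
        pods.append(name)
        actions.append(f"create {name}")
    while len(pods) > desired:
        # Too many replicas (e.g. after scaling down): remove the newest one.
        name = pods.pop()
        actions.append(f"delete {name}")
    return actions


pods: list[str] = []
print(reconcile(3, pods))  # ['create web-1', 'create web-2', 'create web-3']
pods.remove("web-2")       # simulate a crashed pod
print(reconcile(3, pods))  # ['create web-4']
print(reconcile(1, pods))  # ['delete web-4', 'delete web-3']
```

Running the loop again once the state has converged produces no actions, which is what makes the pattern safe to repeat continuously.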

To ensure interoperability across platforms, containers follow specifications from the Open Container Initiative (OCI):

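Image-spec – defines the format of container images: the manifest, configuration, and filesystem layers

Runtime-spec – defines the configuration, execution environment, and lifecycle of a container, as implemented by runtimes such as runc

Distribution-spec – standardizes the HTTP API that registries use to push and pull container images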
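To see how the image and distribution specs fit together, the sketch below builds a minimal OCI image manifest, in which every blob is referenced by a content descriptor (media type, `sha256` digest, and size), and then derives the registry paths a client would request under the distribution spec (`/v2/<name>/manifests/<reference>` and `/v2/<name>/blobs/<digest>`). The repository name, tag, and blob contents are placeholders; a real image config also needs fields such as `rootfs`, and real layers are tar archives.

```python
import hashlib
import json


def descriptor(media_type: str, blob: bytes) -> dict:
    """OCI content descriptor: media type, sha256 digest, and size in bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


# Placeholder blobs: a real layer is a (usually gzipped) tar of filesystem changes,
# and a real config also carries rootfs diff_ids and run settings.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blob = b"placeholder layer bytes"

manifest = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
    "layers": [descriptor("application/vnd.oci.image.layer.v1.tar+gzip", layer_blob)],
}

# Distribution-spec pull paths: fetch the manifest by tag, then each blob by digest.
repo, tag = "example/app", "1.0"  # hypothetical repository and tag
pull_paths = [f"/v2/{repo}/manifests/{tag}"] + [
    f"/v2/{repo}/blobs/{d['digest']}" for d in [manifest["config"], *manifest["layers"]]
]
print(json.dumps(manifest, indent=2))
print("\n".join(pull_paths))
```

Because blobs are addressed by digest, a client can verify every download and skip layers it already has.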

Because containers run directly on the host operating system, they use fewer resources than virtual machines. The shared kernel, however, means containers need deliberate attention to security: scan base images for vulnerabilities, enforce strict security policies while containers run, and keep networks well segmented to protect workloads.
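Some of these runtime policies can be checked automatically before deployment. The sketch below lints a container's security settings using field names from the Kubernetes `securityContext` (`privileged`, `runAsNonRoot`, `allowPrivilegeEscalation`, `readOnlyRootFilesystem`). The `lint` function and its rules are an illustrative assumption, not a substitute for an admission controller or Kubernetes' Pod Security Standards.

```python
def lint(security_context: dict) -> list[str]:
    """Return policy violations for a Kubernetes-style securityContext dict (hypothetical rules)."""
    problems = []
    if security_context.get("privileged", False):
        problems.append("container runs privileged")
    if not security_context.get("runAsNonRoot", False):
        problems.append("runAsNonRoot is not set to true")
    # Treat an unset value as allowed: escalation is only blocked when explicitly disabled.
    if security_context.get("allowPrivilegeEscalation", True):
        problems.append("privilege escalation is allowed")
    if not security_context.get("readOnlyRootFilesystem", False):
        problems.append("root filesystem is writable")
    return problems


hardened = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
}
print(lint(hardened))              # []
print(lint({"privileged": True}))  # four violations
```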

Thanks to their speed, efficiency, and portability, containers have quickly become the go-to choice for deploying cloud-native applications. That said, just like VMs, they have their own set of limitations that users should keep in mind.

Comparison: VMs and Containers

Virtual machines and containers are both tools that help developers run applications without worrying too much about the underlying hardware and operating systems. Essentially, they create separate spaces where applications can run smoothly, along with everything they need to function properly. This separation helps avoid conflicts and makes sure that applications behave consistently, regardless of where they’re deployed. However, the way they go about achieving this isolation is quite different, and each has its own set of advantages and downsides.

Both VMs and containers enable the following:

Isolation — Applications run independently from one another, reducing the risk of conflicts.

Portability — Software can be packaged as an image and deployed consistently across environments.

Scalability — Multiple instances can be run simultaneously to handle fluctuating workloads.

Manageability — Both can be automated using orchestration and configuration tools.

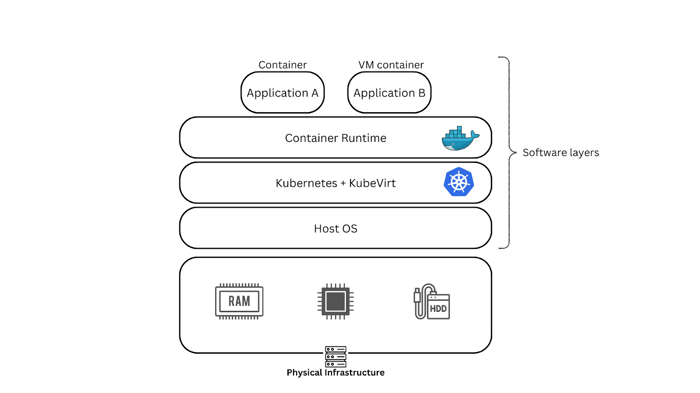

Containers and virtual machines often work hand in hand, combining their strengths for efficient computing. By running containers inside VMs, you get the best of both worlds: the easy portability that containers offer and the strong isolation that virtualization provides.

What sets VMs and containers apart is how they’re built. VMs create a virtualized version of an entire computer system, complete with its own operating system. In contrast, containers operate at the operating system level, sharing the host's kernel. This key difference affects how they perform, how efficient they are, and when it’s best to use each one.

| Feature | Virtual Machines | Containers |
| --- | --- | --- |
| Operating system | Full guest OS per VM, running on a hypervisor | Share the host OS kernel |
| Resource usage | Heavy: several GBs per instance | Lightweight: typically MBs |
| Startup time | Slower: full OS boot required | Near-instant (seconds) |
| Isolation | Strong isolation, secure boundaries | Lighter isolation, kernel is shared |
| Best suited for | Legacy apps, multi-OS environments, high-security workloads | Microservices, CI/CD pipelines, cloud-native apps |

Practical implications

Performance and efficiency: Containers typically consume fewer resources and start up in seconds, making them more efficient for dynamic, cloud-native applications. VMs carry the overhead of a full OS but may outperform containers in certain scenarios, such as memory-bandwidth-intensive or highly isolated workloads.

Scalability: Containers scale rapidly and work well in microservices environments. VMs scale more slowly and require more storage, but remain reliable for predictable, steady workloads.

Management: Container orchestration with tools like Kubernetes automates scaling, service discovery, and resilience. VMs, by contrast, are often managed with traditional configuration management or infrastructure-as-code tools.

| Category | Virtual Machines | Containers |
| --- | --- | --- |
| Isolation and security | Strong isolation; each VM has its own OS. Hypervisors, however, can be a target for attacks. | Process-level isolation on a shared OS kernel, so security depends more heavily on configuration. |
| Resource utilization | Higher consumption due to OS overhead. | Highly efficient; packages only the application and its dependencies. |
| Deployment | Slower; better suited to stable and legacy apps. | Faster; optimized for frequent builds and microservices. |
| Orchestration | Managed via configuration and provisioning tools (e.g., Terraform, VMware tools). | Automated with Kubernetes, Docker Swarm, or Nomad. |

Which should you use?

The choice between containers and VMs depends largely on your application needs:

Use containers when:

Building cloud-native applications or microservices.

Prioritizing fast development cycles with CI/CD.

Running scalable workloads that need rapid provisioning.

Use VMs when:

Running legacy or monolithic applications.

Hosting workloads requiring high security and strong isolation.

Running multiple operating systems on the same hardware.
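The checklist above can be encoded as a simple rule of thumb. This is a hypothetical helper that mirrors the article's criteria, including the hybrid case of running containers inside VMs when a workload needs both; real decisions also weigh cost, team skills, and compliance.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload traits mirroring the checklist."""
    cloud_native: bool = False        # microservices or cloud-native design
    fast_ci_cd: bool = False          # frequent builds and deploys
    rapid_scaling: bool = False       # needs quick provisioning
    legacy_or_monolith: bool = False  # legacy or monolithic application
    strong_isolation: bool = False    # high-security, strongly isolated workloads
    multiple_os: bool = False         # several operating systems on the same hardware


def recommend(w: Workload) -> str:
    """Map workload traits to a platform using the checklist's rules of thumb."""
    container_fit = w.cloud_native or w.fast_ci_cd or w.rapid_scaling
    vm_fit = w.legacy_or_monolith or w.strong_isolation or w.multiple_os
    if container_fit and vm_fit:
        return "containers inside VMs"  # the hybrid model
    if container_fit:
        return "containers"
    if vm_fit:
        return "VMs"
    return "either"


print(recommend(Workload(cloud_native=True)))                         # containers
print(recommend(Workload(legacy_or_monolith=True)))                   # VMs
print(recommend(Workload(rapid_scaling=True, strong_isolation=True)))  # containers inside VMs
```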

In many organizations, the right answer is not either/or but both. Containers can run inside VMs to leverage existing infrastructure and security controls, while VMs continue to serve workloads that containers cannot efficiently handle.

This hybrid model reflects the reality of today’s IT environments: each technology has a role, and knowing when to use which ensures optimal performance, security, and cost efficiency.

Emerging Compute Paradigms

As infrastructure needs continue to change, we're moving past the traditional limits of virtual machines and containers. A new wave of compute models is transforming how applications are built and deployed. These models aim to be faster, more portable, and more efficient, while tackling the scalability and complexity issues of earlier approaches.

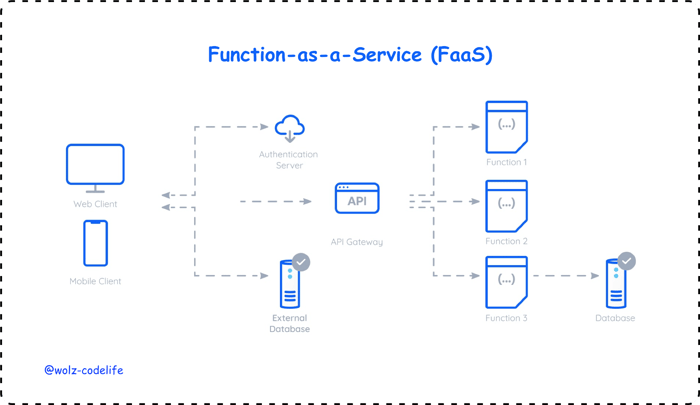

Serverless computing, or Function-as-a-Service (FaaS)

Serverless computing, most commonly delivered as Function-as-a-Service (FaaS), lets developers focus on their code without managing the underlying servers. They write small, event-driven functions that run whenever a trigger fires, such as an HTTP request or a new message in a queue, and the cloud provider handles everything else, including provisioning and scaling, automatically.
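As a sketch, here is what such an event-driven function can look like in Python, following the `handler(event, context)` convention used by AWS Lambda. The event shape (an HTTP-style dict with a JSON `body`) is an assumption for illustration, and the local call at the end stands in for the platform, which would normally invoke the function once per incoming event.

```python
import json


def handler(event: dict, context: object) -> dict:
    """Handle one event; the platform provisions and scales instances of this function."""
    # Assumed event shape: {"body": "<JSON string>"} as delivered by an HTTP trigger.
    payload = json.loads(event.get("body") or "{}")
    name = payload.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation for testing; in production the FaaS platform calls handler().
response = handler({"body": json.dumps({"name": "edge"})}, context=None)
print(response["body"])  # {"message": "Hello, edge!"}
```

The function holds no state between calls, which is what lets the platform run zero, one, or hundreds of copies as traffic changes.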

This approach suits businesses with unpredictable workloads especially well, because they pay only for the compute their functions actually consume. It also speeds up development, since teams can spend their energy on the application itself rather than on infrastructure.
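A back-of-the-envelope comparison shows why pay-per-use suits spiky traffic. FaaS platforms commonly bill per request plus execution time in GB-seconds (allocated memory multiplied by duration). The prices and traffic below are made-up placeholders, not any provider's actual rates, so substitute your own.

```python
def faas_monthly_cost(requests: int, avg_ms: float, memory_gb: float,
                      price_per_gb_s: float, price_per_million_req: float) -> float:
    """Pay-per-use cost: compute (GB-seconds) plus a per-request charge."""
    gb_seconds = requests * (avg_ms / 1000) * memory_gb
    return gb_seconds * price_per_gb_s + requests / 1_000_000 * price_per_million_req


def always_on_monthly_cost(instances: int, price_per_hour: float, hours: float = 730) -> float:
    """An always-on server is billed for every hour (~730 per month), busy or idle."""
    return instances * price_per_hour * hours


# Hypothetical prices and traffic, for illustration only.
faas = faas_monthly_cost(requests=2_000_000, avg_ms=100, memory_gb=0.5,
                         price_per_gb_s=0.00002, price_per_million_req=0.20)
vm = always_on_monthly_cost(instances=1, price_per_hour=0.05)
print(f"FaaS: ${faas:.2f}/month, always-on: ${vm:.2f}/month")
```

With these placeholder numbers the function costs about $2.40 a month against $36.50 for the idle-most-of-the-time server. At sustained high volume the comparison can flip, so it is worth running the numbers against your own traffic profile.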

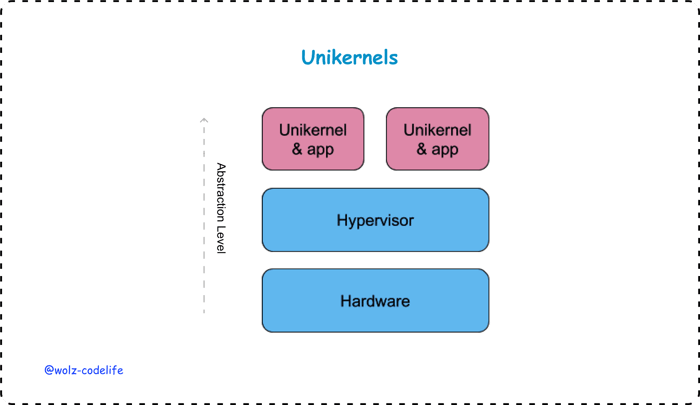

Unikernels

Unikernels are an exciting development in computing. They compile an application together with only the operating-system components it needs, producing a compact, single-purpose machine image. As a result, they can boot in milliseconds, use far fewer resources than traditional virtual machines (VMs), and offer strong isolation with a small attack surface.

Their minimal design makes them appealing for performance-sensitive tasks, Internet of Things (IoT) devices, and secure environments. However, they have not yet reached the widespread adoption of containers or VMs, largely because the tooling and ecosystem around unikernels are still immature.

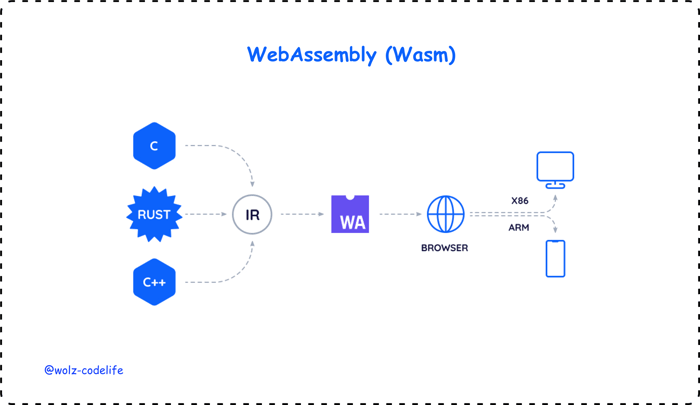

WebAssembly (Wasm)

WebAssembly (Wasm) is gaining traction in cloud computing thanks to its ability to run code securely and portably. Originally created for browsers, Wasm is now moving into server-side and edge computing. One of its biggest advantages is near-native performance across platforms, which makes it a good fit for distributed applications, microservices, and edge workloads.

Wasm modules are small, isolated, and easily transferable, making them a strong alternative for situations where speed and security really matter.

Overall, these trends suggest a future where computing is more flexible and efficient, moving away from conventional methods like virtual machines and containers, and toward models that are better suited for a highly distributed, cloud-native environment.

Distributed and Edge Architectures

As applications become more latency-sensitive and data-intensive, traditional centralized cloud models struggle to keep up. To tackle this issue, distributed and edge architectures are emerging as critical approaches for delivering faster, more resilient experiences.

Edge computing

Edge computing brings computation closer to where it's needed. Instead of sending all data back to a distant centralized server, it processes information at or near the source, whether that's a mobile device, an IoT sensor, or a local edge server. Shorter distances mean lower latency and quicker responses, which is crucial for self-driving cars, smart factories, and immersive augmented and virtual reality experiences. By shortening the data's journey, edge computing makes these technologies smoother and more interactive.
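The latency benefit follows from physics. Light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), or about 200 km per millisecond, so propagation delay alone sets a floor under round-trip time. The distances below are hypothetical, and real latency adds routing, queuing, and processing time on top.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in fiber: ~200,000 km/s


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances from a device to where its data is processed.
for label, km in [("distant cloud region", 1500), ("metro edge site", 50), ("on-premises edge server", 1)]:
    print(f"{label:>24}: >= {min_rtt_ms(km):.2f} ms")
```

Before any processing happens, the 1,500 km trip already costs at least 15 ms per round trip, while a nearby edge site keeps that floor well under a millisecond.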

Fog computing

Fog computing extends the cloud model with an intermediate layer that connects centralized clouds to edge devices. Rather than relying solely on a distant cloud server or on the devices themselves, fog computing distributes processing across several levels of the network, such as gateways and local servers. This is especially useful for applications that need fast responses and coordination, like smart city systems or real-time health monitoring.

On a larger scale, this decentralized way of managing data makes our systems more resilient since we’re not putting all our eggs in one basket by relying on a single data center. It also helps meet regulations around data residency by keeping sensitive data within specific regions or local areas.
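One way to picture the fog layer is as a placement decision: each task goes to the most central tier that still meets its latency budget, and data with residency constraints never leaves its region. The tiers, latencies, and `place` function below are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    rtt_ms: float    # typical round trip from the device (assumed values)
    in_region: bool  # does this tier keep data inside the local jurisdiction?


# Ordered from most central (most capacity) to closest to the device.
TIERS = [
    Tier("cloud", rtt_ms=80.0, in_region=False),
    Tier("fog", rtt_ms=10.0, in_region=True),
    Tier("edge", rtt_ms=1.0, in_region=True),
]


def place(latency_budget_ms: float, residency_required: bool) -> str:
    """Pick the most central tier that satisfies latency and residency constraints."""
    for tier in TIERS:
        if tier.rtt_ms <= latency_budget_ms and (tier.in_region or not residency_required):
            return tier.name
    raise ValueError("no tier satisfies the constraints")


print(place(500, residency_required=False))  # cloud: batch analytics
print(place(500, residency_required=True))   # fog: health data stays in region
print(place(5, residency_required=True))     # edge: real-time control loop
```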

Overall, the combination of edge and fog computing represents a significant shift toward infrastructure that is both scalable and location-aware, letting organizations strike the right balance of speed, security, and control for each workload.

Composable Infrastructure

Composable infrastructure is an innovative approach to managing IT resources. Instead of dedicating physical servers, storage units, and networking equipment to specific tasks, it treats them as flexible pools. Teams can then mix and match resources through software according to their needs, much like assembling a custom plate from a buffet.

Imagine your IT team working on machine learning projects. They can quickly request the exact setup they need, such as a configuration with powerful GPU nodes and fast storage for training deep neural networks, and have it provisioned in minutes instead of waiting on new hardware. Once training is over, the same resources can be reassigned to another task, such as a latency-sensitive trading application. In short, composable infrastructure combines the performance of dedicated hardware with the flexibility usually associated with cloud environments.
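The compose-and-release cycle described above can be sketched as bookkeeping over shared pools. The `ResourcePool` class and its methods are hypothetical stand-ins for a vendor's composition API; they model only allocation, not the fabric that physically connects disaggregated hardware.

```python
from collections import Counter


class ResourcePool:
    """Disaggregated hardware tracked as counts per resource type (toy model)."""

    def __init__(self, **capacity: int) -> None:
        self.free = Counter(capacity)
        self.systems: dict[str, Counter] = {}

    def compose(self, name: str, **request: int) -> bool:
        # Carve a logical system out of the pools, all-or-nothing.
        need = Counter(request)
        if any(self.free[kind] < qty for kind, qty in need.items()):
            return False
        self.free -= need
        self.systems[name] = need
        return True

    def release(self, name: str) -> None:
        # Decompose the system and return its hardware to the pools.
        self.free += self.systems.pop(name)


pool = ResourcePool(gpus=8, cpus=128, nvme_tb=40)
print(pool.compose("ml-training", gpus=8, cpus=64, nvme_tb=20))  # True
print(pool.compose("trading", gpus=1, cpus=32))                  # False: no GPUs left
pool.release("ml-training")
print(pool.compose("trading", cpus=32, nvme_tb=2))               # True
```

The all-or-nothing check matters: a half-composed system that holds some GPUs but lacks storage would strand hardware that other teams could use.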

Practical examples include:

Finance: Investment banks and hedge funds use composable infrastructure to spin up high-performance compute clusters for risk modeling or algorithmic trading, then tear them down when market windows close.

AI/ML: Data scientists can dynamically allocate GPUs, CPUs, and storage depending on whether they are training, validating, or serving models — ensuring hardware utilization stays high.

Analytics: Enterprises analyzing large datasets can flex resources up during peak batch jobs and release them afterwards, avoiding underutilized hardware.

Products such as HPE Synergy and Dell EMC PowerEdge MX, along with a number of newer startups, are making it easier for companies to adopt composable systems. These systems expose API-driven provisioning, which lets them integrate with DevOps pipelines.

Composable infrastructure is an appealing option for organizations looking to balance the reliability of on-premises infrastructure with the flexibility of the cloud. This powerful combination meets the demands for both high performance and adaptability in today’s fast-paced environment.

Migration Strategies and Challenges

Transitioning from traditional virtual machines and containers to new computing models isn’t usually something you can just dive into headfirst. Most organizations take their time with this shift, gradually incorporating new methods alongside their existing systems. This step-by-step approach lets teams explore innovative options like serverless functions, edge computing, and composable systems without interrupting the essential applications that keep their businesses running smoothly.
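A common way to run new and existing platforms side by side is to send a small, deterministic share of traffic to the new one and raise it as confidence grows. The sketch below hashes a stable user ID into 100 buckets so each user consistently lands on the same backend; the backend names and the `route` function are hypothetical.

```python
import hashlib


def route(user_id: str, new_platform_percent: int) -> str:
    """Send a stable share of users (0-100 percent) to the new platform."""
    # Hash the user ID into one of 100 buckets; the same user always gets the same bucket.
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100
    return "serverless" if bucket < new_platform_percent else "legacy-vm"


users = [f"user-{i}" for i in range(10_000)]
share = sum(route(u, 10) == "serverless" for u in users) / len(users)
print(f"{share:.1%} of users routed to the new platform")  # roughly 10%
```

Because a user's bucket never changes, raising the percentage only adds users to the new platform; nobody flips back and forth between backends mid-migration.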

Successful migration requires more than new tools; it demands a mindset shift. Developers, operators, and business leaders must align around modern practices such as infrastructure-as-code, event-driven design, and security-by-default. Training is critical, as teams need to understand not only how to use new platforms but also how to rethink architectures for efficiency and scalability.

Vendor ecosystems and interoperability also remain central concerns. With many emerging technologies still maturing, organizations risk lock-in or integration challenges if they commit too heavily to a single vendor too early. A balanced strategy often involves piloting workloads across multiple environments while prioritizing open standards.

Best practices

Start with pilot projects in non-critical workloads.

Provide hands-on training to build in-house expertise.

Leverage open standards and APIs to reduce vendor lock-in.

Align migration plans with business objectives (e.g., cost reduction, faster time-to-market).

Establish clear monitoring and security baselines early.
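For the monitoring baseline, one simple approach is to record a latency percentile for the existing system before a pilot starts and flag the pilot if it regresses beyond a tolerance. The sample values and the 10% tolerance below are hypothetical; `p95` uses the nearest-rank method.

```python
def p95(samples: list[float]) -> float:
    """95th percentile by the nearest-rank method."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer arithmetic
    return ordered[rank - 1]


def regressed(baseline_ms: list[float], pilot_ms: list[float], tolerance: float = 0.10) -> bool:
    """True if the pilot's p95 latency exceeds the baseline p95 by more than `tolerance`."""
    return p95(pilot_ms) > p95(baseline_ms) * (1 + tolerance)


baseline = [float(ms) for ms in range(1, 101)]  # 1..100 ms, so p95 = 95 ms
pilot_ok = [ms * 1.05 for ms in baseline]       # 5% slower
pilot_bad = [ms * 1.25 for ms in baseline]      # 25% slower
print(regressed(baseline, pilot_ok), regressed(baseline, pilot_bad))  # False True
```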

Challenges

Migrating to new systems can bring great benefits, but the process is rarely smooth. Many organizations face hurdles such as legacy systems that are hard to move away from, resistance to change within teams, and tools that are not yet mature. It can also be difficult to justify the up-front cost of migration, especially when the return on investment depends on workloads growing significantly. To tackle these challenges, organizations need strong support from leadership, gradual rollouts, and continuous assessment of both technical and business outcomes throughout the process.

Conclusion

Virtual machines and containers have transformed how we think about infrastructure, making it easier to move applications around, scale them up when needed, and utilize our hardware more efficiently. However, as we push for lower latency, quicker setups, and even better resource usage, it’s becoming clear that these technologies are just stepping stones in the evolution of computing.

We’re starting to see new approaches pop up, like serverless functions, unikernels, WebAssembly, edge computing, and composable infrastructure. These innovations suggest that the future of computing is heading towards more flexible, distributed, and adaptive systems.

Instead of choosing one technology over another, it looks like we’ll be embracing a mix of them. Organizations will likely find themselves using virtual machines for legacy applications, containers for microservices, edge computing for workloads that need low latency, and serverless models for functions that demand quick, bursty processing—all managed through a cohesive strategy.

For companies that want to stay ahead, being adaptable is key. This means investing in training for their teams, supporting open standards, and being willing to test out new technologies as they emerge. The businesses that succeed will be the ones that don’t just hop on the latest trends but also weave these innovations into a well-thought-out strategy that balances performance, costs, security, and creativity. This way, they’ll be ready to thrive in a world where computing is more dynamic, distributed, and resilient than ever before.

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.