A comprehensive guide to how principal engineers can adapt their leadership role in a multi-agent, AI-driven stack.

Software engineering is stepping into a transformative new era. This shift is less about the tools and frameworks we traditionally relied on and more about how we orchestrate complex systems. With the rise of AI-powered architectures, the way we build, test, and maintain software is changing dramatically. What started as basic code-generation assistants has grown into sophisticated multi-agent systems that can design, deploy, and manage entire software lifecycles. These systems function like a team of specialized agents working together, each with its own goals, much like a group of microservices.

We now have autonomous agents that can handle tasks such as opening pull requests, optimizing databases, and troubleshooting issues, while orchestrators coordinate their work and keep it on track. As a result, the complexity of our work has shifted from managing infrastructure to managing intelligence itself.

The professionals who will thrive in this new environment aren’t just data scientists or prompt engineers; they’re the principal engineers. They have a deep understanding of how things can go wrong, how distributed systems can fail, and they know that reliability comes from thoughtful design, not just luck. As AI takes on more responsibilities in coding, testing, and decision-making, these principal engineers become crucial. They’re tasked with creating the guidelines, building in observability, and establishing trust boundaries to make sure that collaboration between humans and machines is safe and effective.

Leading in this age of multi-agent systems requires a different approach. It’s not about writing every line of code yourself; it’s about setting the principles, frameworks, and architectures that will help us navigate this AI-driven world in a way that is reliable, understandable, and ultimately trustworthy.

What Is an AI-Driven Stack?

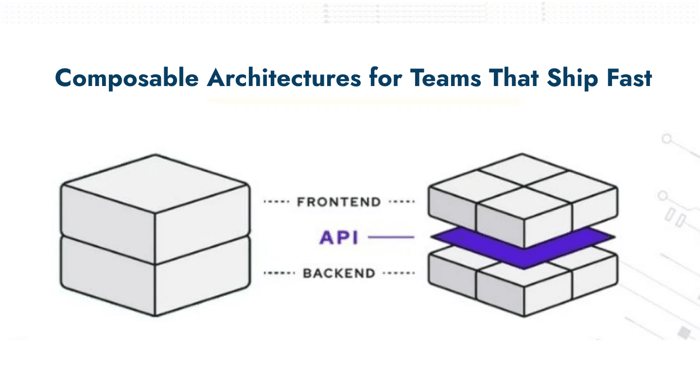

Modern software architecture is evolving from static, rules-based systems into dynamic ecosystems where intelligence is a first-class citizen. In traditional stacks, infrastructure served as a foundation for deterministic workloads: code deployed, requests routed, metrics logged, and pipelines maintained. But as organizations embed machine learning into every layer of their products, a new paradigm is emerging: the AI-driven stack.

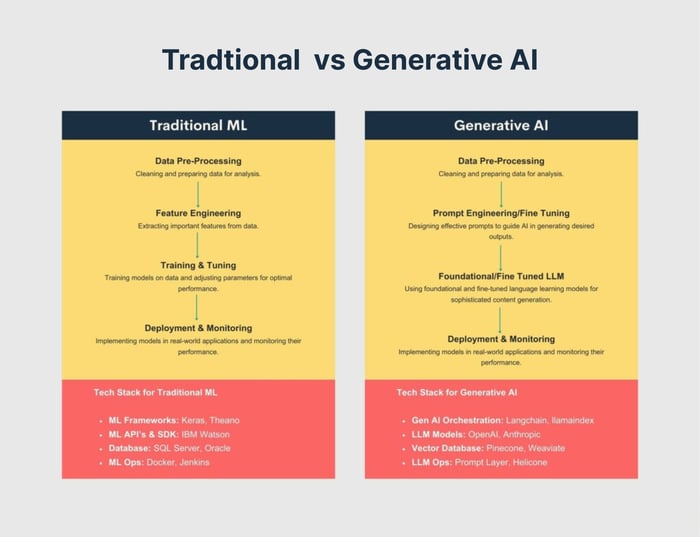

1.1 From Traditional to Generative-AI Architectures

Building a web application used to be fairly straightforward: it relied on predictable interactions between APIs, databases, frontends, and CI/CD automation. Each layer was deterministic, meaning that the same input always produced the same output. With the rise of AI-enhanced architectures, that has changed. We now have components like recommendation systems, generative tools, and even autonomous agents that reason, learn, and adapt in real time. Instead of just following instructions, these systems respond to their context, which makes applications much more dynamic. This evolution turns what used to be static processes into adaptable systems that continually learn and improve over time.

1.2 Defining Characteristics of an AI-Driven Stack

An AI-driven stack is defined by four interlocking layers:

Data Pipelines – Continuous ingestion, labeling, and transformation of diverse data streams that fuel model accuracy and retraining.

Model Orchestration – The coordination of multiple models and agents (LLMs, domain-specific models, embeddings) through frameworks like Ray, LangChain, or Hugging Face Transformers.

Intelligent Services – APIs and microservices augmented with AI reasoning, from predictive analytics to generative content creation, embedded into business workflows.

Feedback Loops – Monitoring and reinforcement systems that capture user behavior, evaluate outcomes, and fine-tune performance over time.

Together, these layers enable a self-optimizing ecosystem, one where software doesn’t just execute logic but learns from its environment, making every release smarter than the last.

How Multi-Agent AI Stacks Work

Multi-agent AI systems are built to reflect the way humans collaborate in teams. Just like specialists with different skills come together to tackle complex challenges, these systems use several agents, each with its own expertise. Instead of having one large model trying to handle everything, they coordinate, compete, or cooperate through organized methods to find the best solutions. It’s all about harnessing the strengths of each agent to work toward a common goal.

2.1 Multi-Agent Concept

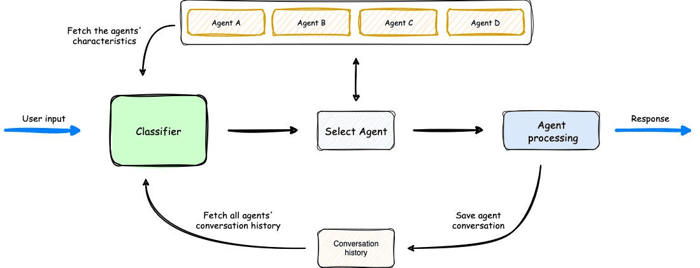

In a typical multi-agent setup, there’s usually a central coordinator, like a friendly gatekeeper, that helps manage the flow of requests. Imagine you’ve got a question or need some support. When you reach out, the coordinator assesses your input and decides which expert agent is the best fit to help you. Each agent has its own unique skills: for example, Agent A might be a whiz at generating code, Agent B excels at creating summaries, while Agent C is your go-to for retrieving data.

Instead of having all the agents work on your request at the same time, the coordinator skillfully directs tasks, pulling in relevant background information to provide context and selecting the most appropriate agent for the job. This setup creates a vibe similar to a collaborative team rather than a single assistant; each agent operates independently but works together towards a common goal, making the entire experience feel much more cohesive and efficient for you.

2.2 Core Components

The architecture shown above illustrates the core elements of a multi-agent stack:

Classifier (Input Router): Interprets the user request, referencing agent metadata and historical interactions to route queries accurately.

Agent Pool: A set of specialized agents, each with its own skills, tools, and context. Agents can collaborate by sharing insights or verifying each other’s outputs.

Agent Selection Logic: Chooses the best-fit agent or dynamically assembles multiple agents to handle complex queries.

Agent Processing: Executes the assigned task (reasoning, retrieving data, or generating output) before sending the response.

Conversation History Layer: Stores past interactions and outcomes, allowing agents to learn over time, maintain context, and improve decisions.

This orchestration pattern transforms AI from isolated models into adaptive ecosystems, where specialized agents coordinate seamlessly to deliver fast, context-aware responses at scale.
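To make the components above more concrete, here is a minimal sketch of a coordinator that classifies a request, picks one agent from a pool, and records the exchange in a history layer. Everything in it is an assumption for illustration: the `Agent` and `Coordinator` names, the keyword-overlap classifier, and the lambda handlers are hypothetical stand-ins, and a real router would more likely use a model together with agent metadata.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """A specialized agent: a name, routing keywords, and a handler (all hypothetical)."""
    name: str
    keywords: set[str]
    handle: Callable[[str, list[str]], str]


@dataclass
class Coordinator:
    """Routes each request to a single agent and records the exchange."""
    agents: list[Agent]
    history: list[tuple[str, str, str]] = field(default_factory=list)

    def classify(self, request: str) -> Agent:
        # Toy classifier: score each agent by keyword overlap with the request.
        # On a tie (including no match at all), the first agent in the pool wins.
        words = set(request.lower().split())
        return max(self.agents, key=lambda a: len(a.keywords & words))

    def dispatch(self, request: str) -> str:
        agent = self.classify(request)
        # Conversation history layer: earlier requests are passed along as context.
        context = [past_request for past_request, _, _ in self.history]
        response = agent.handle(request, context)
        self.history.append((request, agent.name, response))
        return response


coder = Agent("coder", {"code", "function", "bug"},
              lambda req, ctx: f"[coder] draft for: {req}")
summarizer = Agent("summarizer", {"summarize", "summary", "tldr"},
                   lambda req, ctx: f"[summarizer] summary of: {req}")
retriever = Agent("retriever", {"find", "lookup", "fetch"},
                  lambda req, ctx: f"[retriever] results for: {req} ({len(ctx)} prior turns)")

coordinator = Coordinator([coder, summarizer, retriever])
```

Calling `coordinator.dispatch("summarize this design doc")` would route to the summarizer in this sketch. The point is the shape, with classification, selection, processing, and history kept as separate and testable steps, rather than the toy scoring rule.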

The Principal Engineer’s Role in the Multi-Agent Era

The role of the principal engineer is evolving in exciting ways. It's no longer just about designing systems and reviewing code; principal engineers are becoming key figures who bridge the gap between cutting-edge AI technology and the essential principles of reliability, governance, and ethics in the enterprise world. As AI systems start to play a bigger role in creating, deploying, and even managing software, the focus is shifting. The question is no longer whether engineers should collaborate with AI, but how they can take the lead in this new landscape.

3.1 Architectural Leadership

Principal engineers play a crucial role as the architects behind robust, modular AI systems. Their main responsibility is to ensure that all the components, like planners, validators, retrievers, and executors, work seamlessly together in a way that allows the system to grow and adapt without falling apart under its own complexity. This involves creating reliable coordination layers that can handle faults, establishing clear standards for tracking the reasoning behind decisions, and building in feedback loops that help the system learn from its mistakes. A successful AI architecture isn’t just about being independent; it’s about being able to adapt and grow intelligently while still being easy to test and secure.
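One way to picture a coordination layer that handles faults is a wrapper that retries a primary step, falls back to a safer one, and keeps a trace of what happened. The sketch below is an assumption-laden illustration: `run_with_fallback`, `flaky_executor`, and `safe_executor` are hypothetical names, and real systems would typically add backoff, timeouts, and narrower exception handling.

```python
from typing import Callable

Step = Callable[[str], str]


def run_with_fallback(task: str, primary: Step, fallback: Step,
                      max_attempts: int = 2) -> tuple[str, list[str]]:
    """Try the primary step up to max_attempts times, then use the fallback.

    Returns the result together with a trace of every attempt.
    """
    trace: list[str] = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = primary(task)
            trace.append(f"primary ok on attempt {attempt}")
            return result, trace
        except Exception as exc:  # broad on purpose: any agent failure counts here
            trace.append(f"primary failed on attempt {attempt}: {exc}")
    result = fallback(task)
    trace.append("fallback used")
    return result, trace


def flaky_executor(task: str) -> str:
    # Hypothetical executor agent that always times out.
    raise TimeoutError("executor timed out")


def safe_executor(task: str) -> str:
    # Hypothetical fallback: hand the task to a person instead of acting.
    return f"queued for human review: {task}"


result, trace = run_with_fallback("apply schema migration", flaky_executor, safe_executor)
```

Returning the trace alongside the result is a deliberate choice in this sketch: the caller always learns not only what the system did, but which path it took to get there.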

3.2 Technical Governance

Principal engineers play a crucial role in shaping the guidelines that help ensure our AI interactions meet our organization's standards. They focus on creating design patterns that everyone can use, ensuring that different frameworks and APIs work well together, and avoiding chaos by keeping track of model versions and evaluations in one place. Much like how CI/CD pipelines have streamlined the deployment process, we can expect AI governance pipelines to become the norm for getting models approved, conducting thorough tests, and ensuring compliance.
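As a rough illustration of what a governance pipeline step might look like, the sketch below keeps model versions and their evaluation scores in one registry and checks a candidate against required thresholds before approval. The `ModelRecord` type, the metric names, and the threshold values are all hypothetical, chosen only to show the pattern.

```python
from dataclasses import dataclass


@dataclass
class ModelRecord:
    """One registered model version and its evaluation results."""
    name: str
    version: str
    eval_scores: dict[str, float]


# Hypothetical approval thresholds; real values would come from the organization.
REQUIRED_SCORES = {"accuracy": 0.90, "safety": 0.95}

# Single place where versions and evaluations are tracked.
registry: dict[tuple[str, str], ModelRecord] = {}


def register(record: ModelRecord) -> None:
    registry[(record.name, record.version)] = record


def approval_failures(record: ModelRecord,
                      required: dict[str, float] = REQUIRED_SCORES) -> list[str]:
    """Return the reasons a model cannot be approved; an empty list means it passes."""
    failures = []
    for metric, minimum in required.items():
        score = record.eval_scores.get(metric)
        if score is None:
            failures.append(f"{metric}: missing evaluation")
        elif score < minimum:
            failures.append(f"{metric}: {score:.2f} below required {minimum:.2f}")
    return failures


candidate = ModelRecord("router", "1.3.0", {"accuracy": 0.93, "safety": 0.91})
register(candidate)
```

Here `approval_failures(candidate)` would report the safety score as below the threshold, so the candidate would not be promoted. Treating a missing evaluation as a failure, rather than a pass, is the conservative default this sketch assumes.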

3.3 Mentorship and Influence

Principal engineers do more than just work on technical systems; they really shape the engineering culture within their teams. They take the time to mentor others on how to use AI responsibly, guiding developers on how to assess the output from models, understand the reasoning behind decisions, and manage any uncertainties that arise. The focus shifts from simply asking, “Can we automate this?” to a more thoughtful, “Should we trust this?” Their role is crucial in helping everyone embed ethical considerations and ensure that decisions are understandable at every stage of development.

3.4 Acting as Translator

Principal engineers play a crucial role in bridging the gap between business goals and what can realistically be achieved with technology. They take strategic ideas like reducing costs, speeding up release cycles, and improving decision-making and translate them into actionable architecture that can be measured and implemented. They are the ones who help leaders grasp what is doable and guide developers in making responsible choices.

In many ways, a principal engineer takes on the role of a chief systems thinker in an organization embracing AI. They’re not just architects; they’re also mentors and ethical guides. In a landscape where code increasingly generates itself, their true value comes from shaping how humans and machines can collaborate intelligently and effectively.

Challenges and Risks

The excitement surrounding multi-agent AI systems brings along a set of engineering challenges that go beyond just technical know-how. These difficulties require a level of organizational growth and maturity. As these agents become more autonomous and the ways they coordinate with each other grow in complexity, principal engineers must find a balance. They need to foster innovation while ensuring safety, clarity, and trustworthiness in their systems.

4.1 Complexity and Emergent Behavior

Multi-agent systems are complex and inherently nonlinear. Each agent interacts with others in ways that affect their behavior, leading to feedback loops that can produce surprising outcomes. Debugging becomes less about following lines of code and more about understanding the dynamics of these interactions. You have to figure out why an agent made a specific decision or how two lines of reasoning diverged. Traditional monitoring tools often fall short here. Engineers need observability systems that capture reasoning traces, decision scores, and the conversations between agents. Without this kind of insight, what starts as innovative behavior can quickly spiral into instability.
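A minimal sketch of that kind of observability, assuming agents can report a short rationale and a confidence score with each decision, might look like the following. The `DecisionEvent` fields and the in-memory `TraceLog` are hypothetical; a production system would more likely ship these events to an existing tracing or logging backend.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class DecisionEvent:
    """One decision made by one agent, with the reasoning behind it."""
    trace_id: str            # ties together all events for a single request
    agent: str
    decision: str
    rationale: str           # short, human-readable reason for the decision
    confidence: float        # agent-reported score between 0 and 1
    received_from: Optional[str] = None  # upstream agent, if any


class TraceLog:
    """In-memory event store, used here only for illustration."""

    def __init__(self) -> None:
        self.events: list[DecisionEvent] = []

    def record(self, event: DecisionEvent) -> None:
        self.events.append(event)

    def for_trace(self, trace_id: str) -> list[DecisionEvent]:
        # Reconstruct the chain of decisions behind one request.
        return [e for e in self.events if e.trace_id == trace_id]

    def low_confidence(self, threshold: float) -> list[DecisionEvent]:
        # Surface decisions that may deserve human attention.
        return [e for e in self.events if e.confidence < threshold]

    def to_jsonl(self) -> str:
        # One JSON object per line, a common format for log pipelines.
        return "\n".join(json.dumps(asdict(e)) for e in self.events)


log = TraceLog()
log.record(DecisionEvent("req-1", "planner", "split into 2 subtasks",
                         "request mentions both a bug and a report", 0.88))
log.record(DecisionEvent("req-1", "executor", "skip index rebuild",
                         "table is under the size limit", 0.41, received_from="planner"))
```

With events shaped like this, answering "why did the executor skip the rebuild?" becomes a query over `log.for_trace("req-1")` instead of a guess.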

4.2 Data Governance and Security

As we see agents interacting and sharing data, prompts, and model outputs, it opens up new avenues for potential risks. Sensitive information like customer data, proprietary code, and internal documents could accidentally get mixed up between agents or even leak through external APIs. To tackle this challenge, it’s essential for principal engineers to set up robust data isolation policies and ensure encryption is applied at all levels. They also need to weave in detailed access controls within the orchestration systems. Security practices should move away from just static role-based models to more context-aware approaches. This way, each agent has access only to what it genuinely needs for its current task, minimizing risks and enhancing safety.
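The difference between a static role-based check and a context-aware one can be sketched in a few lines. In the example below, access depends on the task an agent is currently assigned, not on a fixed role. The scope strings, task names, and the `is_allowed` helper are hypothetical and only illustrate the idea of least privilege per task.

```python
from dataclasses import dataclass

# Hypothetical mapping from task type to the data scopes that task needs.
TASK_SCOPES = {
    "summarize_ticket": {"tickets:read"},
    "fix_bug": {"repo:read", "repo:write"},
}


@dataclass(frozen=True)
class AccessRequest:
    agent: str
    task: str
    scope: str


def is_allowed(request: AccessRequest, agent_tasks: dict[str, set[str]]) -> bool:
    """Grant access only if the agent is currently assigned the task
    and the task itself needs the requested scope."""
    assigned = request.task in agent_tasks.get(request.agent, set())
    needed = request.scope in TASK_SCOPES.get(request.task, set())
    return assigned and needed


# Current assignments would come from the orchestrator; hard-coded here.
agent_tasks = {"agent_b": {"summarize_ticket"}}
```

In this sketch, `agent_b` can read tickets while it is summarizing one, but a request for `repo:write` is denied, both because the summarization task does not need it and because `agent_b` has not been assigned a task that does.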

4.3 Bias and Ethical Concerns

As we develop AI systems, it's important to recognize that these systems can pick up biases from the historical data they learn from. This is especially concerning in collaborative environments, where feedback loops can magnify those biases even more. That's why the role of principal engineers is so vital. They need to act as guardians of responsible AI, ensuring that fairness checks, model transparency, and ongoing validation are woven into the fabric of the engineering process. Ultimately, we want ethical behavior to be an integral part of our systems from the start, rather than something we try to address after the fact.
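A fairness check can be as simple as comparing outcome rates across groups. The sketch below computes one common measure, the demographic parity gap, which is the difference between the highest and lowest rate of positive outcomes per group. The group labels and sample data are made up for illustration, and this is only one of several possible fairness metrics; which one fits depends on the use case.

```python
from collections import defaultdict
from typing import Iterable


def selection_rates(outcomes: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, approved in outcomes:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(outcomes: Iterable[tuple[str, bool]]) -> float:
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(outcomes)
    return max(rates.values()) - min(rates.values())


# Made-up sample: group "a" is approved 3 of 4 times, group "b" 1 of 4 times.
sample = [
    ("a", True), ("a", True), ("a", False), ("a", True),
    ("b", True), ("b", False), ("b", False), ("b", False),
]
gap = demographic_parity_gap(sample)
```

A check like this could run as part of ongoing validation, failing a build or raising an alert when the gap exceeds a threshold the team has agreed on.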

4.4 Organizational Resistance

No matter how great an AI setup is, it can still fall short if the teams don’t embrace it. Sometimes, engineers hesitate to rely on autonomous systems, while managers might worry about losing control. Principal engineers must step in and bridge this divide. They need to show the real benefits through clear results, foster an environment where team members feel safe to try new ideas, and create straightforward ways to handle situations when things don’t go as planned.

In the end, the real challenge isn’t just about creating effective systems; it’s about building systems we can trust and developing organizations that know how to guide them thoughtfully.

Strategies for Leading Successfully

To thrive in today's world filled with multiple intelligent agents, we need to shift our mindset beyond just technical expertise. Principal engineers play a crucial role in building environments where both autonomous systems and human teams can grow and adapt together in a safe and effective way. Instead of trying to manage every single decision that an AI makes, the focus should be on creating guidelines and incentives that encourage teamwork and enhance our collective intelligence. The aim is to promote collaboration and transparency, allowing everyone, humans and machines alike, to contribute to a shared vision.

5.1 Adopt Product Thinking for AI Systems

When we think about an AI agent, it’s important to see it as more than just a model: it's a complete product that serves real users and provides value. For principal engineers, success should be measured through tangible outcomes like reducing latency, cutting costs, preventing errors, or creating new revenue opportunities. Approaching AI with a product mindset means we treat each workflow as a feature that can be updated and improved continuously based on user feedback. This approach helps us connect the innovative spirit of experimentation with the stability and reliability needed in a business setting.

5.2 Build Guardrails, Not Gates

Innovation flourishes when teams can experiment freely and learn from failures without facing dire consequences. Rather than bogging down creativity with endless review processes or strict approvals, principal engineers can set up supportive frameworks like policy-as-code, safe testing environments, and easy rollback options. These tools create a space where teams can explore new ideas while staying within safe boundaries, ensuring they remain compliant and in control. It’s all about allowing freedom to innovate while keeping risks manageable.
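Policy-as-code with an easy rollback can be sketched as a set of small policy functions that every proposed change must pass, with the last known-good configuration kept whenever one fails. The policies, config keys, and the `apply_change` helper below are hypothetical examples and do not represent any particular policy engine.

```python
import copy
from typing import Callable, Optional

# A policy inspects a candidate config and returns a violation message or None.
Policy = Callable[[dict], Optional[str]]


def max_replicas_policy(config: dict) -> Optional[str]:
    if config.get("replicas", 0) > 10:
        return "replicas must not exceed 10"
    return None


def no_prod_debug_policy(config: dict) -> Optional[str]:
    if config.get("env") == "prod" and config.get("debug"):
        return "debug must be off in prod"
    return None


POLICIES: list[Policy] = [max_replicas_policy, no_prod_debug_policy]


def apply_change(current: dict, change: dict) -> tuple[dict, list[str]]:
    """Return (config, violations).

    If any policy is violated, the current config is returned untouched,
    which acts as an automatic rollback to the last known-good state.
    """
    candidate = {**copy.deepcopy(current), **change}
    violations = [msg for policy in POLICIES if (msg := policy(candidate)) is not None]
    if violations:
        return current, violations
    return candidate, []


current = {"env": "prod", "replicas": 3, "debug": False}
```

A change such as `{"replicas": 5}` goes straight through, while `{"replicas": 50, "debug": True}` is rejected with two violation messages and the running configuration stays as it was. No human approval step sits in the path, which is the sense in which this is a guardrail and not a gate.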

5.3 Invest in Observability and Feedback Loops

It’s not enough to just track performance metrics; we need to capture the reasoning behind decisions, keep a record of interactions, and monitor communications between different models. By establishing ongoing feedback loops, we empower our systems to learn and improve themselves, while also giving engineers a clearer picture of emerging issues before they turn into serious problems. Principal engineers need to advocate for comprehensive observability tools that connect our technical efforts directly to the goals and outcomes of the business.

5.4 Cultivate Cross-Functional Collaboration

The success of multi-agent systems really hinges on the ability to communicate across different fields. Principal engineers must bridge the gap between data science, machine learning, and product teams. They play a key role in making sure that our AI solutions not only meet performance goals but also adhere to ethical standards and align with business needs.

In today’s landscape, effective leadership means blending technical vision with a genuine understanding of people’s needs. We need to build systems that are not only smart but also responsible and easy to understand. The best principal engineers will approach every new AI feature as an opportunity to innovate in design and to lead by example.

Conclusion

As we embrace AI-driven systems and multi-agent architectures, how principal engineers lead will greatly influence whether this change brings about innovation or confusion.

Their goal is to strike a careful balance between fostering creativity and ensuring safety. They want to encourage new ideas while keeping systems reliable and trustworthy. By focusing on smart design, handling data responsibly, and collaborating across various teams, principal engineers can leverage the complexities of AI to create real value.

Those who can blend human insight with machine capabilities will not only influence the future of engineering but will also redefine what it truly means to create with intelligence.
