When you set out to build a new software product, chances are you’ll implement an API sooner or later, whether to connect your frontend with the backend, to connect your services, or to let your users integrate your product with their applications. APIs are the entry points to your services, and the Web as we know it today wouldn’t exist without them.

With the rise of serverless technology, creating an API has become easier than ever. All that setup and maintenance of VMs is gone; you just write a function, deploy it to the cloud provider of your choice, and are good to go.

But not all is rosy with that newfound power.

The proliferation of many small serverless APIs can lead to an increased number of integration points around your systems. While the monolithic apps of the past profited from end-to-end typing, these new microservices, with different code bases, make it harder to get a backend type to the frontend. Since developers can’t replicate serverless architectures 100% on their local machine, debugging is more challenging, and the need for typing is even more significant.

Luckily, some nifty developers set out to solve this issue. Several approaches to typed APIs exist, including GraphQL, OpenAPI, gRPC, and tRPC.

This article will examine tRPC, a framework that allows you to build end-to-end typed APIs with TypeScript without code generation. We’ll check out the basics of tRPC and implement a serverless API on Cloudflare Workers.

What is tRPC?

tRPC is a TypeScript API framework that helps you build typed remote procedure call (RPC) APIs. You define your endpoints with a validator that creates static types and import these types into your frontend. Since TypeScript 3.8, importing only types is possible, so no runtime code is imported that could end up in your client code.

Take this code, for example:

// server.ts
export class Router {}

const router = new Router()

// client.ts
import { Router } from "./server"

function useRouter(router: Router) {
  ...
}

Here, we export the class directly and import it to the client so that the useRouter function can type its argument correctly. This would add the whole Router class and its dependencies to the client code.

Compare this with an example that exports only the type:

// server.ts
class Router {
  route = "/"
}

const router = new Router()

export type AppRouter = typeof router

// client.ts
import type { AppRouter } from "./server"

function useRouter(router: AppRouter) {
  ...
}

Here we only export the types of the router object and not its full implementation. The useRouter function can profit from the server types, but no server code will end up in the compiled JavaScript.

In short, tRPC is an API framework that leverages TypeScript’s type exports to provide end-to-end typing without code generation.

Now that we’ve cleared that up, let’s build something with tRPC!

We will build a simple API for a blog that will allow creating, reading, updating, and deleting articles and comments. We will use tRPC and deploy it as a Cloudflare Worker.

Prerequisites

You’ll need Node.js and a Cloudflare account to deploy the API.

Initializing the Project

First, we have to initialize a new project with the following commands:

$ mkdir trpc-blog && cd trpc-blog
$ npm init -y
$ npm i @trpc/server @trpc/client zod
$ npm i -D @cloudflare/workers-types @types/node typescript vite wrangler

After these commands, we will have a new Node.js project with all the required dependencies.

We also need configuration files for TypeScript and Wrangler, the Cloudflare Workers CLI.

Create a tsconfig.json with this code:

{
  "compilerOptions": {
    "target": "esnext",
    "module": "esnext",
    "lib": ["dom", "dom.iterable", "esnext"],
    "skipLibCheck": true,
    "strict": true,
    "forceConsistentCasingInFileNames": true,
    "noEmit": true,
    "moduleResolution": "node",
    "resolveJsonModule": true,
    "esModuleInterop": true,
    "isolatedModules": true,
    "types": ["@cloudflare/workers-types"]
  },
  "include": ["**/*.ts"],
  "exclude": ["node_modules"]
}

And a wrangler.toml with this code:

name = "trpc-api"
main = "modules/api.ts"
compatibility_date = "2022-05-01"

Implementing the Schema

The tRPC router just needs a validator function with the following signature:

(input: unknown) => input

This function has to check the input type, throw when the input has the wrong type or return the input when the type is correct. This simple interface allows us to use a validation library like Zod to make the construction of more complex schemas easier.
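To make that contract concrete, here is a minimal hand-written validator without any library. The name parseId and the rule that IDs are non-negative integers are assumptions made for this sketch; the article's own schema uses Zod instead.

```typescript
// A hand-written validator with the shape (input: unknown) => T.
// `parseId` is a hypothetical name used only for this sketch.
function parseId(input: unknown): number {
  if (typeof input !== "number" || !Number.isInteger(input) || input < 0) {
    throw new Error(`Expected a non-negative integer, got ${JSON.stringify(input)}`)
  }
  // Returning the checked value gives callers its static type (number).
  return input
}

const validId = parseId(3) // typed as number

// A value of the wrong type makes the validator throw.
let rejected = false
try {
  parseId("3")
} catch {
  rejected = true
}
```

A function like this should be usable wherever a tRPC procedure expects an input validator, but a library such as Zod scales better once the schemas get nested.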

Let’s create a file at modules/schema.ts with this code:

import { z } from "zod"

const Id = z.number()

const Comment = z.object({
  id: Id,
  author: z.string(),
  text: z.string(),
})

const Article = z.object({
  id: Id,
  author: z.string(),
  title: z.string(),
  text: z.string(),
  comments: z.array(Comment),
})

const CommentForArticle = z.object({
  articleId: Id,
  comment: Comment.omit({ id: true }),
})

export const Schema = { Article, Comment, CommentForArticle, Id }

export type Article = z.infer<typeof Article>
export type Comment = z.infer<typeof Comment>

First, we create the validators for our models, and then we use Zod’s infer type utility to derive static types from them. This way, our static types and the validators are always in sync.
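The idea of deriving static types from validators can be sketched without a library. The helpers below (Validator, str, num, objectOf, Infer) are hypothetical names for this illustration and only mimic the principle; Zod's real implementation is considerably more elaborate.

```typescript
// Hypothetical mini-validators; this is not how Zod is implemented.
type Validator<T> = (input: unknown) => T

const str: Validator<string> = (input) => {
  if (typeof input !== "string") throw new Error("Expected a string")
  return input
}

const num: Validator<number> = (input) => {
  if (typeof input !== "number") throw new Error("Expected a number")
  return input
}

// Builds an object validator from a map of field validators.
function objectOf<S extends Record<string, Validator<unknown>>>(shape: S) {
  return (input: unknown): { [K in keyof S]: ReturnType<S[K]> } => {
    if (typeof input !== "object" || input === null) {
      throw new Error("Expected an object")
    }
    const source = input as Record<string, unknown>
    const result: Record<string, unknown> = {}
    for (const key of Object.keys(shape)) {
      result[key] = shape[key](source[key])
    }
    return result as { [K in keyof S]: ReturnType<S[K]> }
  }
}

// The static type is derived from the validator, so the two cannot drift apart.
type Infer<V extends Validator<unknown>> = ReturnType<V>

const CommentValidator = objectOf({ id: num, author: str, text: str })
type CommentType = Infer<typeof CommentValidator>

const parsed: CommentType = CommentValidator({ id: 0, author: "Fan", text: "Hi" })

// Invalid data is rejected at runtime by the same definition.
let invalidRejected = false
try {
  CommentValidator({ id: "0", author: "Fan", text: "Hi" })
} catch {
  invalidRejected = true
}
```

Because CommentType is computed from CommentValidator, adding or renaming a field in the validator changes the static type in the same step.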

Implementing the tRPC Router

Next, we create a router that will handle our requests. Create a file at modules/blog.router.ts and add this implementation:

import { initTRPC } from "@trpc/server"
import { Schema } from "./schema"
import type { Article } from "./schema"

export type BlogRouter = typeof blogRouter

const fakeDb: Article[] = []

const { router, procedure } = initTRPC.create()

export const blogRouter = router({
  createArticle: procedure
    .input(Schema.Article.omit({ id: true, comments: true }))
    .mutation(({ input }) => {
      const newArticle: Article = {
        ...input,
        id: fakeDb.length,
        comments: [],
      }
      fakeDb.push(newArticle)
      return newArticle
    }),

  listArticles: procedure.query(() => fakeDb),

  readArticle: procedure.input(Schema.Id).query(({ input }) => fakeDb[input]),

  updateArticle: procedure
    .input(
      Schema.Article.omit({ comments: true }).partial({
        author: true,
        title: true,
        text: true,
      })
    )
    .mutation(({ input }) => {
      const article = fakeDb[input.id]
      if (!article) throw new Error("Article not found")
      fakeDb[input.id] = { ...article, ...input }
      return fakeDb[input.id]
    }),

  deleteArticle: procedure.input(Schema.Id).mutation(({ input }) => {
    const article = fakeDb[input]
    if (!article) throw new Error("Article not found")
    fakeDb.splice(input, 1)
    return article
  }),

  createComment: procedure
    .input(Schema.CommentForArticle)
    .mutation(({ input }) => {
      const article = fakeDb[input.articleId]
      if (!article) throw new Error("Article not found")
      const newComment = { ...input.comment, id: article.comments.length }
      article.comments.push(newComment)
      return newComment
    }),

  deleteComment: procedure.input(Schema.Id).mutation(({ input }) => {
    const article = fakeDb.find((article) =>
      article.comments.some((comment) => comment.id === input)
    )
    if (!article) throw new Error("Comment not found")
    const comment = article.comments[input]
    article.comments.splice(input, 1)
    return comment
  }),
})

The router uses the validators in the Schema to check that each route gets the right data; it also uses our manually inferred types explicitly where they can’t be inferred automatically by TypeScript.

For example, createArticle annotates newArticle as Article. Without that annotation, TypeScript would infer never[] for the empty comments array, because an empty array literal gives it nothing to infer the Comment type from, and the procedure’s inferred output would lose the Comment type.
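The effect of the annotation can be reproduced in isolation. The types BlogComment and BlogArticle below are simplified stand-ins invented for this sketch, and the behavior described in the comments assumes the strict compiler settings from the tsconfig.json above.

```typescript
// Simplified stand-ins for the schema types; the names are hypothetical.
type BlogComment = { id: number; author: string; text: string }
type BlogArticle = { id: number; title: string; comments: BlogComment[] }

// Without an annotation, the empty array literal carries no element type;
// under strict settings TypeScript infers comments as never[].
const unannotated = { id: 0, title: "Draft", comments: [] }

// With the annotation, comments is known to be BlogComment[].
const annotated: BlogArticle = { id: 1, title: "Draft", comments: [] }
annotated.comments.push({ id: 0, author: "Fan", text: "Nice!" })

// The next line would not compile under strict settings,
// because no value is assignable to never:
// unannotated.comments.push({ id: 0, author: "Fan", text: "Nice!" })

const counts = [unannotated.comments.length, annotated.comments.length]
```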

Implementing the API

The actual API implementation is straightforward. Create a new file at modules/api.ts and add the following code:

import { fetchRequestHandler } from "@trpc/server/adapters/fetch"
import { blogRouter } from "./blog.router"

export default {
  async fetch(request: Request): Promise<Response> {
    return fetchRequestHandler({
      endpoint: "/api",
      req: request,
      router: blogRouter,
      createContext: () => ({}),
    })
  },
}

We just call fetchRequestHandler and let it pass all requests that hit the /api path to our router.
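As a rough mental model of what the endpoint option means, the sketch below derives a procedure name from a request URL relative to a prefix. The helper procedurePath and the example.workers.dev host are assumptions for illustration only; this is not tRPC's actual routing code, which also handles batching and input parsing.

```typescript
// Hypothetical helper: returns the procedure path for a request URL,
// or null when the URL lies outside the endpoint prefix.
function procedurePath(requestUrl: string, endpoint: string): string | null {
  const { pathname } = new URL(requestUrl)
  const insidePrefix = pathname === endpoint || pathname.startsWith(endpoint + "/")
  if (!insidePrefix) return null
  // Drop the prefix and the slash that follows it.
  return pathname.slice(endpoint.length + 1)
}

const matched = procedurePath("https://trpc-api.example.workers.dev/api/listArticles", "/api")
const bare = procedurePath("https://trpc-api.example.workers.dev/api", "/api")
const outside = procedurePath("https://trpc-api.example.workers.dev/health", "/api")
```

Here matched is "listArticles", bare is an empty string, and outside is null, which a Worker could turn into a 404 response before handing anything to the router.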

Implementing the Client

Finally, we create the client at modules/client.ts with this implementation:

import { createTRPCProxyClient, httpBatchLink, loggerLink } from "@trpc/client"
import type { BlogRouter } from "./blog.router"
import type { Article, Comment } from "./schema"

const proxy = createTRPCProxyClient<BlogRouter>({
  links: [
    loggerLink(),
    httpBatchLink({ url: "<CLOUDFLARE_WORKER_URL>" }),
  ],
})

const newArticle: Article = await proxy.createArticle.mutate({
  author: "Muhammad Ali",
  title: "My Philosophy",
  text: "Don't count the days; make the days count.",
})
console.log(newArticle) // log each result so it shows up in the browser console

const comment: Comment = await proxy.createComment.mutate({
  articleId: newArticle.id,
  comment: { author: "Fan", text: "You're truly the worlds greatest!" },
})
console.log(comment)

const article: Article = await proxy.readArticle.query(newArticle.id)
console.log(article)

Here, we see the use of the import type statement in action. First, we import the inferred type of our router, and then we import the static schema types inferred from our validators.

The BlogRouter type ensures that the inputs and outputs of all our tRPC functions are statically typed.

Since the outputs of all router functions are already correctly typed, the inferred schema types aren’t strictly needed, but when we import the BlogRouter type on the client, all non-basic type names will get lost. This means we get the correct structure but not the names. The static schema types give us a way to write concise code that handles the data from the server.

We will replace the <CLOUDFLARE_WORKER_URL> when we deploy the script.
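To see how a client can be typed by a router type alone, consider the following library-free sketch. The names serverRouter, Transport, and createClient are hypothetical, and the transport calls the router directly instead of sending HTTP requests; tRPC's real proxy client is more involved, but it similarly needs only the router's type at compile time.

```typescript
// Stand-in for server code; in a real project only its type would be imported.
const serverRouter = {
  greet: (name: string) => `Hello, ${name}!`,
  add: (pair: { a: number; b: number }) => pair.a + pair.b,
}
type AppRouter = typeof serverRouter

// Hypothetical transport: receives a procedure name and its input.
type Transport = (path: string, input: unknown) => unknown

// Builds a client whose methods are typed by R but implemented by a Proxy,
// so the client never needs the router's runtime implementation.
function createClient<R extends object>(transport: Transport): R {
  return new Proxy({} as R, {
    get: (_target, path) => (input: unknown) => transport(String(path), input),
  })
}

// For this sketch, the transport looks up the handler locally.
const handlers: Record<string, (input: any) => unknown> = serverRouter
const client = createClient<AppRouter>((path, input) => handlers[path](input))

const greeting = client.greet("Ada") // typed as string
const sum = client.add({ a: 2, b: 3 }) // typed as number
```

Calling client.greet(42) would be rejected by the compiler, even though the Proxy itself knows nothing about the available procedures.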

Inferring Static Types from the Router

If the server doesn’t export the static schema types, we can infer them on the client from the router type. This requires defining the type names ourselves, but tRPC’s type structure keeps them in sync with the server.

An example of our types looks like this:

import type { inferRouterOutputs } from "@trpc/server"
import type { BlogRouter } from "./blog.router"

// Map every procedure name to its output type
type RouterOutputs = inferRouterOutputs<BlogRouter>

type Article = RouterOutputs["createArticle"]
type Comment = RouterOutputs["createComment"]

Again, this feature uses the import type statement to prevent server code from entering the compiled client JavaScript.
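The mechanism behind this kind of inference can be approximated with a mapped type. The sketch below uses plain functions in place of procedures; miniRouter and OutputsOf are hypothetical names, and tRPC's inferRouterOutputs works on its own procedure types rather than on plain functions.

```typescript
// A router-like object with plain functions standing in for procedures.
const miniRouter = {
  createArticle: (input: { title: string }) => ({
    id: 0,
    title: input.title,
    comments: [] as string[],
  }),
  countArticles: async () => 1,
}

// Maps every procedure name to its (awaited) return type.
type OutputsOf<R extends Record<string, (...args: any[]) => unknown>> = {
  [K in keyof R]: Awaited<ReturnType<R[K]>>
}

type MiniOutputs = OutputsOf<typeof miniRouter>

// Names are chosen on the consuming side; the structure comes from the router.
type MiniArticle = MiniOutputs["createArticle"]
type ArticleCount = MiniOutputs["countArticles"] // number, not Promise<number>

const created: MiniArticle = miniRouter.createArticle({ title: "Hello" })
const expectedCount: ArticleCount = 1
```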

We will run the client in a browser, so we also need an index.html file with this code:

<!DOCTYPE html>
<title>Blog Client</title>
<script type="module" src="/modules/client.ts"></script>

Testing the API

You can test the API by adding these two scripts to your package.json file:

"scripts": {
  "api": "wrangler publish",
  "client": "vite --host"
},

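Then run the scripts. Wrangler and Vite are installed as local dev dependencies, so run them through npm rather than calling the binaries directly. First, deploy the API to Cloudflare with this command:

$ npm run api

Newer Wrangler releases name this command wrangler deploy; if your version rejects wrangler publish, adjust the script accordingly.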

It should then be available at this URL:

https://trpc-api.<YOUR_CLOUDFLARE_USERNAME>.workers.dev

Replace the <CLOUDFLARE_WORKER_URL> in modules/client.ts with the URL you see in your command line. Don’t forget to add the /api path at the end!

Then run Vite to start the client:

$ npm run client

If you open the logged URL in a browser, you should see the interactions with the API in the browser console.

The output should look something like this:

>> mutation #1 createArticle Object
loggerLink.mjs:53 << mutation #1 createArticle Object
client.ts:17 {author: 'Muhammad Ali', title: 'My Philosophy', text: "Don't count the days; make the days count.", id: 1, comments: Array(0)}
loggerLink.mjs:53 >> mutation #2 createComment Object
loggerLink.mjs:53 << mutation #2 createComment Object
client.ts:23 {author: 'Fan', text: "You're truly the worlds greatest!", id: 0}
loggerLink.mjs:53 >> query #3 readArticle Object
loggerLink.mjs:53 << query #3 readArticle Object
client.ts:26 {author: 'Muhammad Ali', title: 'My Philosophy', text: "Don't count the days; make the days count.", id: 1, comments: Array(1)}

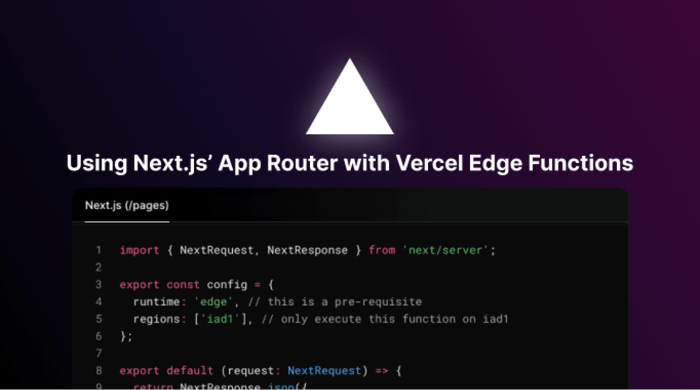

Integrating tRPC with React Query and Next.js

The main selling point of tRPC is a typed connection between APIs and their clients, so you’ll probably want to integrate it with a frontend. You’re in luck: tRPC comes with first-class support for React-based frontends. The tRPC team provides a basic integration via TanStack Query (formerly React Query) and a higher-level integration for Next.js.

Next Steps

tRPC supports subscriptions via WebSockets, which allow you to keep your clients updated without constantly polling the API for new data. So, as a next step, you could try to add subscriptions to the example API. Remember that you need Cloudflare’s Durable Objects to facilitate inter-client communication. You can find an example of using WebSockets with Durable Objects on GitHub.

Summary

Building serverless applications can often lead to multiple small functions calling each other. To keep all of them in check, an API specification that comes with static typing is invaluable.

If you build your whole system with TypeScript, you can leverage its type system across service boundaries with tRPC without any code generation!

Thanks to TypeScript’s type imports, no API code will sneak into your clients.
