
Understand the role of WebAssembly and how you can integrate it into serverless development.

TL;DR

The way we build and manage applications has changed dramatically with the rise of cloud computing and serverless architectures. These technologies allow developers to focus on creating software without worrying about the underlying infrastructure, like servers and storage. However, as the demand for faster and more efficient applications continues to grow, there is a need to improve these serverless systems beyond their traditional capabilities.

One exciting advancement in this area is WebAssembly (often abbreviated as Wasm). Originally designed for enhancing web applications, WebAssembly is now being recognized for its potential to boost performance in serverless environments. It allows applications to run almost as quickly as they would natively on a computer, while also being lightweight and easy to manage.

The combination of serverless computing and WebAssembly is a significant step forward in developing cloud-based applications. While serverless systems simplify the process of deploying and scaling applications, they sometimes struggle with speed and resource use. WebAssembly offers a promising solution to these challenges, making it particularly effective for applications that need to perform well in various settings, whether in powerful cloud data centers or on smaller edge devices.

In this article, we'll look at how WebAssembly can be used in practice in serverless environments. We will survey the platforms that support it, explore techniques for optimizing performance, and examine the challenges and limitations developers should plan for. This information will help developers understand how to successfully integrate WebAssembly into their serverless applications, ultimately leading to better performance and resource management.

What is Serverless Computing?

Serverless computing is a modern approach to building applications that lets developers focus on what matters: creating the software that runs their business. In a serverless model, developers don’t need to worry about managing servers or the underlying technology that makes their applications run. Instead, they can simply write and upload their code.

When someone interacts with the application—like clicking a button or sending a message—the necessary code runs automatically in the cloud. The cloud provider takes care of everything else, including making sure there are enough resources to handle the demand and maintaining the system.

This method is particularly helpful for tasks that don’t need to keep any information from one interaction to the next, which is often the case with events that trigger specific actions. Overall, serverless computing offers a cost-effective way to launch and run applications at scale, allowing businesses to adapt quickly without getting bogged down in infrastructure details.

Core Concepts

Statelessness: Serverless functions operate independently, meaning each time they run, they don’t remember past activities. Any important data needed must be stored in separate locations like databases or cloud storage. While this approach makes scaling easier, developers need to be careful about managing data. For example, if a function processes a user request, it must fetch any necessary information from an external service since it can’t retain information from previous runs.
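To make the pattern concrete, here is a minimal Rust sketch. The `KvStore` trait is hypothetical and stands in for whatever external database or key-value service a real function would call. The `InMemoryStore` type exists only for local testing. The handler keeps no state of its own between invocations: it reads the previous value, computes the new one, and writes it back.

```rust
use std::collections::HashMap;

/// Hypothetical abstraction over an external store (database, KV service, object storage).
/// A real implementation would be a network client; the trait keeps the handler provider-neutral.
pub trait KvStore {
    fn get(&self, key: &str) -> Option<String>;
    fn put(&mut self, key: &str, value: String);
}

/// In-memory stand-in used only to exercise the handler locally.
#[derive(Default)]
pub struct InMemoryStore {
    data: HashMap<String, String>,
}

impl KvStore for InMemoryStore {
    fn get(&self, key: &str) -> Option<String> {
        self.data.get(key).cloned()
    }
    fn put(&mut self, key: &str, value: String) {
        self.data.insert(key.to_string(), value);
    }
}

/// A stateless handler: everything it needs is read from, and written back to,
/// the external store passed in. Nothing is kept in globals between calls.
pub fn handle_visit(store: &mut dyn KvStore, user_id: &str) -> u64 {
    let key = format!("visits:{user_id}");
    // Fetch the previous count from external storage (0 if absent or unparsable).
    let previous: u64 = store.get(&key).and_then(|v| v.parse().ok()).unwrap_or(0);
    let current = previous + 1;
    // Persist the new value so the next invocation, possibly on another instance, sees it.
    store.put(&key, current.to_string());
    current
}
```

Because the state lives behind `KvStore`, any instance (warm, cold, or on another machine) can serve the next request for the same user.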

Auto-Scaling: One of the primary benefits of serverless architecture is its automatic scaling capability. Cloud providers dynamically allocate resources based on request volume, allowing applications to scale up during traffic spikes and down during low demand, thus eliminating manual infrastructure management and maintaining high responsiveness.

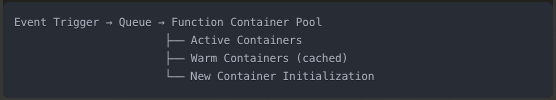

Horizontal scaling happens automatically as the number of concurrent requests changes. The platform creates new instances (containers or, on some platforms, lightweight isolates) as load rises and destroys them as it falls. Many platforms also keep recently used instances "warm" for a period, so follow-up requests avoid cold-start latency during variable traffic.
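The scaling arithmetic can be sketched as follows. This is an illustration, not any provider's actual algorithm, and all names are hypothetical. `per_instance_concurrency` is an assumed platform-specific limit: some platforms route one request to an instance at a time, others many. `warm_instances` is the number of already-running instances that can take traffic without a cold start.

```rust
/// Outcome of a hypothetical scaling decision.
#[derive(Debug, PartialEq)]
pub struct ScalingPlan {
    /// Instances required to serve the current load.
    pub instances_needed: u32,
    /// New instances that must start from scratch (cold starts).
    pub cold_starts: u32,
    /// Warm instances left idle, which the platform may later reclaim.
    pub idle_warm: u32,
}

pub fn plan_scaling(concurrent_requests: u32, per_instance_concurrency: u32, warm_instances: u32) -> ScalingPlan {
    assert!(per_instance_concurrency > 0, "per-instance concurrency must be positive");
    // Ceiling division without overflow: a partly used instance still has to exist.
    let instances_needed = concurrent_requests / per_instance_concurrency
        + u32::from(concurrent_requests % per_instance_concurrency != 0);
    ScalingPlan {
        instances_needed,
        cold_starts: instances_needed.saturating_sub(warm_instances),
        idle_warm: warm_instances.saturating_sub(instances_needed),
    }
}
```

For example, 250 concurrent requests with a limit of 10 per instance and 20 warm instances need 25 instances, 5 of which start cold.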

Pay-Per-Use: In serverless architectures, cost tracks the number of incoming requests and the execution time of functions. This "pay-as-you-go" model relies on fine-grained billing, so charges accrue only while compute resources are actively processing work. As a result, it avoids the overhead of pre-provisioned capacity that may sit underutilized.
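As a worked example, the sketch below estimates a monthly bill under a request-plus-duration model. In this model, compute is billed in GB-seconds: allocated memory multiplied by execution time. AWS Lambda uses a model of this shape, but other platforms bill differently (some by CPU time, for instance). The prices in the usage example are placeholders, not real rates.

```rust
/// Placeholder pricing inputs; substitute your provider's published rates.
pub struct Pricing {
    pub per_million_requests: f64,
    pub per_gb_second: f64,
}

/// Estimated monthly cost under a request + GB-second billing model.
pub fn monthly_cost(p: &Pricing, requests: u64, avg_duration_ms: f64, memory_mb: f64) -> f64 {
    let requests = requests as f64;
    // Request charge: a flat fee per invocation, quoted per million.
    let request_charge = requests / 1_000_000.0 * p.per_million_requests;
    // Compute charge: memory (GB) × duration (s), summed over all invocations.
    let gb_seconds = requests * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0);
    request_charge + gb_seconds * p.per_gb_second
}
```

Take placeholder prices of 1.00 per million requests and 0.001 per GB-second, with 2 million invocations averaging 100 ms at 512 MB. That comes to 2 (requests) plus 100,000 GB-s × 0.001 = 100 (compute), or 102 currency units in total. Zero requests cost nothing.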

Event-Driven Architecture: Serverless architectures are fundamentally event-driven, utilizing triggers that execute functions in reaction to defined actions or states—such as HTTP requests, database modifications, or file uploads. This paradigm aligns well with microservices, where functions are designed for specific tasks, responding to discrete events. This enables a highly granular and modular approach to application design, facilitating scalability and maintainability in complex systems.
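The event-to-function mapping can be sketched in Rust with an enum of trigger types and one small handler per event. The `Event` variants and handler names are hypothetical. On a real platform, the provider's trigger configuration performs the routing that `dispatch` imitates here.

```rust
/// Hypothetical event types mirroring the triggers described above.
#[derive(Debug)]
pub enum Event {
    Http { method: String, path: String },
    DbChange { table: String, row_id: u64 },
    FileUpload { bucket: String, key: String, size_bytes: u64 },
}

// Each function handles exactly one kind of event.
fn on_http(method: &str, path: &str) -> String {
    format!("{method} {path} -> 200")
}

fn on_db_change(table: &str, row_id: u64) -> String {
    format!("reindex {table}#{row_id}")
}

fn on_upload(bucket: &str, key: &str, size: u64) -> String {
    format!("thumbnail {bucket}/{key} ({size} bytes)")
}

/// Stand-in for the platform's trigger mechanism: route each event to its handler.
pub fn dispatch(event: &Event) -> String {
    match event {
        Event::Http { method, path } => on_http(method, path),
        Event::DbChange { table, row_id } => on_db_change(table, *row_id),
        Event::FileUpload { bucket, key, size_bytes } => on_upload(bucket, key, *size_bytes),
    }
}
```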

Benefits & Challenges

The serverless model provides several compelling benefits:

High Scalability: Serverless applications inherently support automatic scaling, allowing for seamless handling of large volumes of requests. Cloud providers manage resource allocation, ensuring applications adapt to changing demand without manual intervention.

Cost Efficiency: Because users are only billed for active execution time, serverless can be highly cost-effective, especially for applications with unpredictable or fluctuating workloads.

Despite these advantages, serverless computing also introduces specific challenges:

Limited Performance Options: Serverless functions are typically constrained by provider-imposed execution time limits, memory constraints, and cold start latency (initial startup time). These limitations can hinder applications with intensive, long-running tasks or real-time processing requirements.

Dependency on Cloud Providers: Serverless applications are typically built against a specific provider's runtimes, triggers, and managed services. This makes platform-agnostic deployments harder and increases the risk of vendor lock-in. Additionally, serverless applications rely on the provider's security measures, architecture, and service integrations, which can limit customization.

The serverless paradigm redraws the boundary of responsibility between developers and infrastructure providers, trading granular control for convenience and scalability. This abstraction improves operational efficiency and developer productivity, but it also introduces technical challenges that must be weighed during solution design. A clear grasp of these trade-offs is essential for running serverless computing in production. With these concepts in hand, developers can assess where serverless architectures fit in their systems, particularly with regard to performance optimizations using technologies like WebAssembly, which we examine in the following sections.

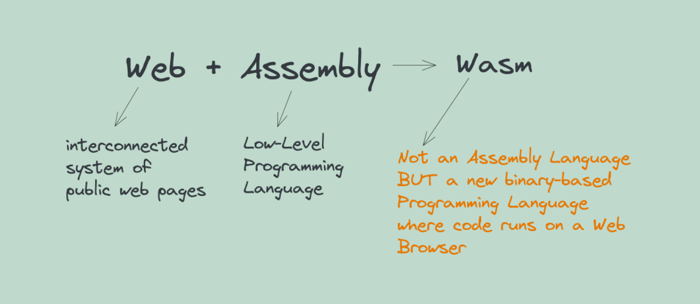

What is WebAssembly (Wasm)?

WebAssembly (Wasm) is a low-level binary instruction format optimized for delivering near-native execution speeds for applications in web browsers and beyond. Originally conceived to overcome JavaScript's limitations in high-performance scenarios, Wasm is now gaining traction as a robust cloud and edge computing solution, particularly in serverless architectures.

Wasm is a binary instruction format for a stack-based virtual machine, designed as a portable compilation target for high-level languages such as Rust, C/C++, and Go. Modules execute in a sandboxed environment while maintaining high performance, and compilers can produce compact binaries that run at near-native speed across diverse platforms. By offering a lightweight and portable runtime, WebAssembly delivers consistent performance, making it a prominent choice for developers seeking cross-platform compatibility and speed in modern application development.

Core Characteristics

Speed: WebAssembly’s compact binary encoding can be decoded and compiled quickly. Its low-level, statically typed instructions map closely to machine code, so execution speeds approach those of native code. For compute-heavy workloads, this typically yields higher and more predictable throughput than JavaScript and interpreted languages. This performance edge makes Wasm a strong option for high-performance applications, particularly in serverless and edge computing, where minimizing latency is imperative.

Cross-Platform Compatibility: Wasm binaries are engineered for portability. The same module can run consistently across browsers, servers, and IoT devices without code changes, provided the host runtime offers the interfaces (such as WASI) that the module imports. This cross-platform capability enables reuse of Wasm modules across deployment scenarios, reducing redundancy and streamlining the development workflow.

Sandboxing: WebAssembly (Wasm) operates within a sandbox that enforces strict isolation from the host environment. A module cannot touch the underlying system directly; it can only call functions the host explicitly provides as imports, which establishes a strong security boundary. This is particularly advantageous in serverless architectures, where untrusted code may run alongside other applications on shared infrastructure.

Languages & Tooling

WebAssembly’s versatility is supported by an expanding ecosystem of languages and tooling:

Supported Languages: Wasm is compatible with languages that compile to its binary format, including Rust, C/C++, and Go. Rust, in particular, has gained popularity due to its memory safety and performance characteristics, making it a natural fit for WebAssembly applications.

Toolchains and Libraries:

Emscripten: A robust toolchain that compiles C and C++ code to WebAssembly, Emscripten has long been a preferred tool for bringing performance-critical code to the web.

wasm-pack: This toolkit simplifies the process of building and packaging Rust code into WebAssembly modules. With wasm-pack, developers can seamlessly build Rust projects for WebAssembly and distribute them in a format compatible with both server and browser environments.
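For a sense of what these toolchains produce, here is the lowest-level Rust route, which needs no external crates: a function exported with the C ABI and an unmangled symbol name. Built as a `cdylib` crate for a Wasm target (for example `cargo build --release --target wasm32-unknown-unknown`), it should appear as a module export named `collatz_steps`. wasm-pack and wasm-bindgen add richer JavaScript interop on top of this. The `#[unsafe(no_mangle)]` spelling assumes Rust 1.82 or later; older toolchains write `#[no_mangle]`. The function itself is only an illustrative compute-bound workload.

```rust
/// Number of Collatz steps needed to reach 1 from `n` (0 for n == 0).
/// Exported with the C ABI so a Wasm host can call it directly; u64 maps to Wasm's i64.
/// Inputs are assumed small enough that 3n + 1 does not overflow.
#[unsafe(no_mangle)]
pub extern "C" fn collatz_steps(mut n: u64) -> u32 {
    if n == 0 {
        return 0;
    }
    let mut steps = 0;
    while n != 1 {
        n = if n % 2 == 0 { n / 2 } else { 3 * n + 1 };
        steps += 1;
    }
    steps
}
```

Because the same source also compiles natively, the logic can be unit-tested with ordinary `cargo test` before it is built for Wasm.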

WebAssembly (Wasm) is a powerful tool for serverless architecture, enabling high-performance, language-agnostic code execution. Its design is tailored for resource-constrained environments, making it an excellent fit for serverless applications. With near-native speeds, robust security, and support for multiple programming languages, Wasm allows developers to create efficient, portable applications. As we will see later, it enhances serverless functions' performance and adds flexibility to edge computing, transforming workload deployment and scaling strategies.

Implementing WebAssembly in Serverless Architectures

Integrating WebAssembly (Wasm) into serverless architectures capitalizes on Wasm's efficiency, portability, and secure execution environment to build high-performance, cost-effective applications. This section surveys serverless platforms that support Wasm and then covers techniques for optimizing Wasm modules for serverless workloads.

Selecting a Serverless Platform

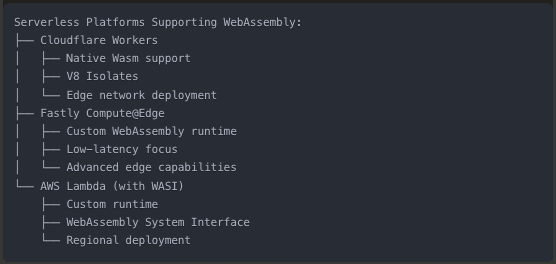

Several serverless platforms now support WebAssembly, each with different strengths:

Cloudflare Workers: Cloudflare’s platform runs WebAssembly (Wasm) modules, alongside JavaScript, inside V8 isolates at its edge locations. Running Wasm functions close to end-users can significantly reduce latency.

Fastly Compute@Edge (now branded Fastly Compute): Fastly runs WebAssembly (Wasm) through the Wasmtime runtime, letting developers build and deploy high-performance Wasm functions at the edge. These functions integrate with Fastly's caching layer and customization options, which helps in building fast, resilient web applications.

AWS Lambda with WASI: AWS Lambda does not provide a native WebAssembly runtime, but WebAssembly System Interface (WASI)-compliant binaries can still run inside Lambda functions. One approach is to package a Wasm runtime such as Wasmtime in a custom runtime; another is to load the module through the Node.js runtime's WebAssembly support. This makes it possible to integrate Wasm modules into serverless functions, though it requires extra packaging work and comes with constraints compared with standard Lambda functions.

Each platform offers unique advantages and trade-offs, so the choice depends on specific requirements such as global distribution, cold start performance, and integration needs.
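On WASI-based setups, a function can be an ordinary program that reads a request on standard input and writes a response to standard output. The sketch below keeps the logic in a generic `run` function so it can be tested natively. The word-count `handle` function is a hypothetical stand-in for real business logic. It could be compiled for `wasm32-wasip1` (formerly `wasm32-wasi`) with a `main` that calls `run(io::stdin().lock(), io::stdout().lock())`, then executed by a WASI runtime such as Wasmtime. How requests are actually delivered varies by platform, so treat the stdin/stdout wiring as an assumption.

```rust
use std::io::{self, Read, Write};

/// Hypothetical business logic: count the words in the request body.
pub fn handle(body: &str) -> String {
    let words = body.split_whitespace().count();
    format!("words={words}\n")
}

/// Read the whole request from `input` and write the response to `output`.
/// A WASI entry point would pass `io::stdin().lock()` and `io::stdout().lock()`.
pub fn run<R: Read, W: Write>(mut input: R, mut output: W) -> io::Result<()> {
    let mut body = String::new();
    input.read_to_string(&mut body)?;
    output.write_all(handle(&body).as_bytes())?;
    output.flush()
}
```

Accepting any `Read`/`Write` pair keeps the handler independent of the host, so the same code can be tested natively with in-memory buffers.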

Performance Optimization Techniques

To maximize the potential of WebAssembly in serverless architectures, it's crucial to implement performance optimization strategies. These strategies primarily target code size reduction, concurrency improvements, and efficient memory management. By focusing on these areas, we can significantly enhance the execution speed and overall efficiency of WebAssembly modules within serverless environments.

Code Optimization

Smaller Wasm binaries reduce load times, which is critical for serverless functions that often run in ephemeral environments. To reduce Wasm module size:

Use compiler optimizations: Size-focused optimization levels, such as -Oz for C/C++ (Clang/Emscripten) or opt-level = "z" in a Rust Cargo profile, prioritize binary size reduction.

Remove unused code: Dead-code elimination and tree-shaking, for example with Binaryen's wasm-opt (which wasm-pack can run automatically for release builds), can strip out unused functions.

Simplify logic: Prefer straightforward code paths over elaborate abstractions that expand into large amounts of generated code, keeping the overall module footprint small.

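Write code with the runtime's compiler in mind: many Wasm runtimes compile modules to machine code just-in-time (JIT) or ahead-of-time (AOT) rather than interpreting them, so standard native-code optimizations still pay off.

Inline small, frequently called functions to avoid call overhead, keeping in mind that aggressive inlining increases binary size.

Prefer simple loops and avoid heavy recursion when possible. Wasm runtimes enforce a bounded call stack, so deep recursion adds call overhead and can exhaust the stack and trap.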
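The last point can be illustrated with a tree traversal. Both functions below compute the same sum over a hypothetical `Node` type. The recursive version uses one call-stack frame per level of nesting. The iterative version keeps pending nodes in a heap-allocated `Vec`, so the traversal's depth no longer consumes the Wasm call stack. This is a sketch of the general technique, not a benchmark.

```rust
/// Hypothetical tree node used to compare traversal strategies.
pub struct Node {
    pub value: u64,
    pub children: Vec<Node>,
}

/// Recursive sum: concise, but each nesting level adds a stack frame,
/// so very deep inputs can exhaust a bounded Wasm stack.
pub fn sum_recursive(node: &Node) -> u64 {
    node.value + node.children.iter().map(sum_recursive).sum::<u64>()
}

/// Iterative sum with an explicit stack: same result, constant call-stack depth.
pub fn sum_iterative(root: &Node) -> u64 {
    let mut total = 0;
    let mut stack = vec![root];
    while let Some(node) = stack.pop() {
        total += node.value;
        stack.extend(node.children.iter());
    }
    total
}
```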

Concurrency & Parallelism

Although WebAssembly itself has limited multi-threading capabilities, some platforms support threading through WebAssembly System Interface (WASI) or other extensions:

Async Processing: Use asynchronous programming for I/O-bound tasks, which are common in serverless functions (e.g., API calls, database interactions). Rust's async/await syntax or Go’s goroutines can handle multiple I/O operations concurrently, even when the Wasm build itself runs on a single thread.

Multi-threading: If the serverless platform and runtime support multi-threading, enable threading using Wasm’s threading API or wasm-bindgen in Rust. Multi-threading allows computation-heavy tasks to be parallelized, reducing execution time for operations that can run concurrently.

Atomic Operations: When using threads, ensure that any shared memory is managed with atomic operations to avoid race conditions. Libraries like std::sync::atomic in Rust provide a safe way to handle atomic memory operations in Wasm.
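A minimal sketch of the atomic-counter pattern in Rust follows. Worker threads count even numbers in separate chunks of the input. Each thread then combines its result with a single `fetch_add` on a shared `AtomicU64`, so no lock is needed. The code uses only the standard library and runs natively as written. Running it as Wasm assumes a threads-enabled build and a host that supports shared memory and atomics, which not every serverless platform provides. The function name and workload are illustrative.

```rust
use std::sync::atomic::{AtomicU64, Ordering};
use std::thread;

/// Count even values in `data` using up to `workers` scoped threads.
pub fn parallel_count_even(data: &[u64], workers: usize) -> u64 {
    let counter = AtomicU64::new(0);
    let workers = workers.max(1);
    // Split the input into roughly equal chunks (never size 0, which `chunks` rejects).
    let chunk_size = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        for chunk in data.chunks(chunk_size) {
            let counter = &counter;
            s.spawn(move || {
                // Count locally, then publish with one atomic add to limit contention.
                let local = chunk.iter().filter(|&&x| x % 2 == 0).count() as u64;
                counter.fetch_add(local, Ordering::Relaxed);
            });
        }
    });
    // `thread::scope` has joined every worker here, so the total is complete.
    counter.load(Ordering::Relaxed)
}
```

`Relaxed` ordering is enough here because the counter is only read after the scope joins all threads, and that join already synchronizes with every `fetch_add`.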

Memory Management

Efficient memory usage is crucial in serverless environments due to limited resources and the stateless, short-lived nature of serverless functions:

Allocate Memory Sparingly: Allocate only the memory required for each task, using explicit allocation and deallocation where possible. In Rust, this can be achieved by managing data structures with strict lifetimes and limiting allocations in tight loops.

Use Linear Memory Efficiently: WebAssembly uses a linear memory model in which memory grows in 64 KiB pages and cannot shrink once grown, and each resize can be costly. To avoid frequent reallocations:

Preallocate memory for predictable workloads.

Use memory pooling for repeated data structures (e.g., buffer pools) to avoid constant memory allocation and deallocation.

Avoid Memory Leaks: Because Wasm exposes a low-level linear memory, leaks steadily inflate a long-lived instance’s memory footprint, and dangling pointers can silently corrupt data. In Rust, ownership and borrowing rules help manage memory safely, while languages like C/C++ require manual tracking.
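The preallocation and pooling advice can be sketched as below. `pages_needed` reflects WebAssembly's 64 KiB page size, which helps when estimating how much linear memory a workload will require. `BufferPool` is a hypothetical minimal pool that hands out preallocated `Vec<u8>` buffers and takes them back with their capacity intact. Hot paths then reuse memory instead of allocating (and potentially growing linear memory) on every request. A production pool would also cap its size and handle concurrent access.

```rust
/// WebAssembly linear memory grows in 64 KiB pages.
pub const WASM_PAGE_SIZE: usize = 64 * 1024;

/// Pages needed to hold `bytes`, useful when sizing a preallocation up front.
pub fn pages_needed(bytes: usize) -> usize {
    bytes / WASM_PAGE_SIZE + usize::from(bytes % WASM_PAGE_SIZE != 0)
}

/// Hypothetical minimal buffer pool: buffers are allocated once and recycled.
pub struct BufferPool {
    free: Vec<Vec<u8>>,
    buf_capacity: usize,
}

impl BufferPool {
    /// Preallocate `count` buffers of `buf_capacity` bytes each.
    pub fn new(count: usize, buf_capacity: usize) -> Self {
        let free = (0..count).map(|_| Vec::with_capacity(buf_capacity)).collect();
        Self { free, buf_capacity }
    }

    /// Take a buffer from the pool, or allocate a fresh one if the pool is empty.
    pub fn acquire(&mut self) -> Vec<u8> {
        self.free.pop().unwrap_or_else(|| Vec::with_capacity(self.buf_capacity))
    }

    /// Return a buffer: contents are cleared, capacity is kept for reuse.
    pub fn release(&mut self, mut buf: Vec<u8>) {
        buf.clear();
        self.free.push(buf);
    }

    /// Number of buffers currently available.
    pub fn available(&self) -> usize {
        self.free.len()
    }
}
```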

Optimizing Wasm for serverless functions with these techniques will reduce response times, lower costs, and ensure that Wasm modules perform reliably under variable loads.

Challenges and Limitations

WebAssembly (Wasm) has gained significant traction in serverless and edge computing environments, but it is not without its challenges and limitations. An in-depth understanding of these obstacles is crucial for developers to foresee potential roadblocks and adopt effective strategies for mitigation.

Limited Ecosystem and Libraries

The WebAssembly ecosystem is in a nascent stage compared to more established environments like JavaScript or Python. This means:

Library Availability: Fewer pre-built libraries are available for Wasm, particularly for tasks like complex data processing, machine learning, or certain cryptographic functions. Many traditional libraries need adaptation to run efficiently in a Wasm environment.

Compatibility: Some existing libraries are not Wasm-compatible due to differences in memory models or lack of support for WebAssembly System Interface (WASI), which provides essential system-level calls for file handling, networking, and other I/O tasks.

These limitations can lead to increased development time, as developers may need to write custom code or find workarounds for missing libraries.

Concurrency Limitations

Concurrency is one of Wasm’s primary limitations, especially in serverless environments that rely on highly concurrent processes:

Lack of Full Multi-Threading: Although some serverless platforms support threading (e.g., through Wasm extensions or WASI), WebAssembly’s threading model remains limited and is unavailable on many platforms. This restricts Wasm’s ability to handle multi-threaded workloads, impacting applications that rely heavily on parallel processing for performance gains.

Atomic Operations and Shared Memory: Even when threading is supported, Wasm’s shared memory and atomic operations are still relatively constrained. This can hinder efficient thread synchronization and data sharing, which are crucial for high-performance, multi-threaded serverless applications.

For now, Wasm developers may need to rely on async functions and single-threaded approaches, which may not be as performant for CPU-bound tasks.

Debugging Complexity

Debugging WebAssembly is notably more complex than debugging traditional serverless code, mainly due to:

Binary Format: Wasm’s low-level binary format is hard to interpret directly, because the high-level source is not immediately visible. Developers must rely on debug information (such as DWARF sections embedded in the module) and tooling to map Wasm binaries back to source code, which can be cumbersome.

Limited Debugging Tools: While tools like Chrome DevTools and Wasm-specific debuggers exist, they are not yet as mature as the debugging tools available for languages like JavaScript or Python. Build tools such as wasm-pack and wasm-opt can help by producing or preserving debug information in development builds, but debugging complex applications remains challenging.

Error Reporting: Error messages in Wasm tend to be lower-level, which can make diagnosing issues more time-consuming, especially when they originate from memory management issues or subtle bugs in low-level code.
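One way to soften the error-reporting problem in Rust is to return descriptive errors instead of panicking. Depending on the target and runtime, a Rust panic inside a Wasm module may reach the host only as a trap, sometimes reported as nothing more than an `unreachable` instruction. A `Result`, by contrast, can be logged or turned into a meaningful response. The parser and `ParseError` type below are hypothetical examples of the pattern.

```rust
use std::fmt;

/// Hypothetical error type carrying enough context to diagnose a failure from logs.
#[derive(Debug, PartialEq)]
pub enum ParseError {
    Empty,
    InvalidNumber { field: usize, raw: String },
}

impl fmt::Display for ParseError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            ParseError::Empty => write!(f, "input was empty"),
            ParseError::InvalidNumber { field, raw } => {
                write!(f, "field {field}: {raw:?} is not an integer")
            }
        }
    }
}

/// Parse comma-separated integers, reporting which field failed instead of panicking.
pub fn parse_csv_ints(input: &str) -> Result<Vec<i64>, ParseError> {
    let trimmed = input.trim();
    if trimmed.is_empty() {
        return Err(ParseError::Empty);
    }
    trimmed
        .split(',')
        .enumerate()
        .map(|(i, raw)| {
            let raw = raw.trim();
            raw.parse::<i64>()
                .map_err(|_| ParseError::InvalidNumber { field: i, raw: raw.to_string() })
        })
        // Collecting into Result stops at the first error.
        .collect()
}
```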

The identified challenges underscore the critical areas for advancement within the WebAssembly ecosystem. As we see continued maturation of the technology, advancements in tooling, enhanced debugging capabilities, and improved support for concurrency are expected to mitigate many existing limitations. This progression will enhance the accessibility and robustness of WebAssembly for deploying serverless architectures.

Conclusion

WebAssembly (Wasm) is set to redefine performance and efficiency within serverless computing paradigms, particularly for cloud-native applications. Our exploration delved into the core principles of serverless architectures and Wasm, highlighting key attributes such as Wasm's impressive execution speed, robust cross-platform interoperability, and effective memory usage. Despite its promise, several challenges persist, including a limited ecosystem of libraries, constraints on concurrency, and the intricacies of debugging complex applications.

Looking forward, we anticipate significant advancements in Wasm tooling, enhanced multi-threading support, and a more vibrant ecosystem that will augment Wasm's applicability, particularly at the network edge where low latency execution is critical. These developments will facilitate the creation of high-performance, resource-efficient applications that can scale effortlessly within serverless infrastructures.

For developers eager to explore the frontiers of serverless architecture, engaging with WebAssembly presents both a formidable challenge and a forward-looking opportunity in cloud computing. With the maturation of Wasm technology, now is an ideal moment to delve into its diverse applications within serverless environments.

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.