
An in‐depth guide to Agentic AI and its architectural patterns.

As artificial intelligence continues to mature, a new paradigm is emerging—Agentic AI—which marks a decisive shift from passive, predictive models to systems capable of autonomous, goal-directed behavior. Unlike traditional machine learning models that return outputs in response to static prompts, agentic systems exhibit a richer, more interactive form of intelligence. These agents do not merely predict; they plan, act, and adapt in pursuit of defined objectives.

The growing relevance of agentic AI is driven by recent advances in large language models (LLMs), which now function as powerful reasoning engines at the core of these systems. In contrast to reactive virtual assistants like Siri or Alexa, agentic AI can deconstruct high-level goals into executable subtasks, interface with external tools (such as APIs or search engines), and maintain context through dynamic memory modules. This architecture unlocks a new class of applications: DevOps copilots that automate infrastructure workflows, autonomous research agents that synthesize information across domains, and self-prompting systems that iteratively refine their strategies with minimal human intervention.

These capabilities signal a broader transformation: agentic AI is poised to bridge the gap between narrow, task-specific intelligence and more general-purpose autonomy. However, this shift also introduces new challenges. As systems become more autonomous, questions of alignment, safety, and ethical governance become increasingly urgent.

This article offers a deep exploration of agentic AI, from its core mechanics and architectural patterns to real-world applications and emerging risks.

Agentic vs. Reactive AI

Understanding the distinction between reactive and agentic AI is essential for grasping the trajectory of artificial intelligence development. This section explores their differences, presents a comparative analysis, and highlights the paradigm shift toward dynamic and collaborative systems.

Reactive AI operates primarily through input-output mapping, relying on predefined rules or learned models to generate specific responses. These systems excel in narrowly defined tasks but lack the adaptability and initiative necessary for more complex scenarios. For instance, calculators execute mathematical computations based strictly on user input, while spam filters classify emails using trained algorithms. Reactive AI is inherently limited by its inability to plan, adapt to novel contexts, or pursue objectives beyond immediate instructions, making it unsuitable for handling multi-step or dynamic processes.

In contrast, agentic AI is characterized by a proactive, goal-oriented approach. It can perceive its environment, interpret an assigned objective, and decompose complex tasks into more manageable components. Agentic AI systems use external tools, such as APIs or search engines, and adapt based on feedback or newly acquired information. For example, an agentic AI might research a given topic, prioritize related subtasks, and carry out a plan, revising its approach as results come in. This level of autonomy and adaptability marks a significant advance in AI capabilities, moving beyond static response mechanisms to systems that can handle multi-step, changing tasks.

| Aspect | Reactive AI | Agentic AI |
|---|---|---|
| Operation | Input-output mapping, rule-based | Goal-driven, proactive |
| Autonomy | None, requires human input | High, operates independently |
| Task Handling | Single-task, predefined responses | Multi-step, dynamic task decomposition |
| Adaptability | Limited, static behavior | Adapts to new information |
| Examples | Siri, basic chatbots, spam filters | Autonomous robots, multi-agent systems |

Examples

Reactive AI: Siri responds to voice commands with predefined actions, like setting reminders. Basic chatbots follow scripted responses, and spam filters classify emails without planning or context retention.

Agentic AI: Autonomous robots navigate warehouses, adjusting their paths dynamically. Autonomous agent frameworks such as AutoGPT break high-level goals into subtasks (e.g., research, writing) and work through them, while multi-agent systems divide such subtasks among specialized agents.

The transition from reactive to agentic AI signifies a paradigm shift from monolithic, response-based architectures to modular, cooperative frameworks. Reactive AI operates in a siloed manner, executing discrete tasks independently, while agentic AI integrates specialized agents, memory systems, and tools to tackle multifaceted challenges. This enables end-to-end automation in sectors such as logistics and customer service, through systems that plan, reason, and act in concert.

How Agentic AI Works

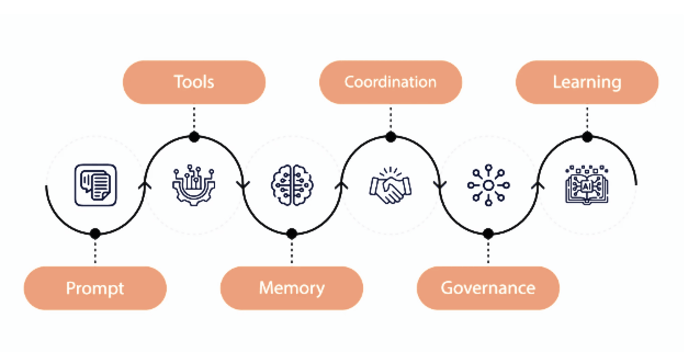

Agentic AI operates through a cyclical process: Perceive → Plan → Act → Learn. The system first perceives its environment using sensors, APIs, or data streams to gather relevant information. It then plans by generating a sequence of actions or subtasks to achieve its goal, often using reasoning algorithms or large language models (LLMs). The agent acts by executing these steps, interacting with tools or the environment, and learns from the outcomes to refine its strategy, ensuring adaptability and progress toward objectives.

Perceive → Plan → Act → Learn Loop

Perceive – The agent processes inputs (natural language, sensor data, or system signals).

Plan – It evaluates possible actions in the context of a defined goal, often by breaking tasks into subtasks.

Act – It performs actions, such as invoking tools, querying APIs, or prompting sub-agents.

Learn – It reflects on outcomes to improve future decisions (often via self-feedback loops rather than gradient updates).

This loop can repeat continuously or execute as a single cycle depending on the agent’s configuration and purpose.
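The loop above can be sketched in a few lines of Python. This is a toy under stated assumptions: the `LoopAgent` class, its counter-valued environment, and the step-size rule are all hypothetical, and the "learning" is plain self-adjustment between cycles (no model and no gradient updates).

```python
from dataclasses import dataclass, field


@dataclass
class LoopAgent:
    """Toy agent that raises a counter to a target via Perceive -> Plan -> Act -> Learn."""
    goal: int
    step_size: int = 1
    lessons: list[int] = field(default_factory=list)

    def perceive(self, env: dict) -> int:
        # Read the current state of the environment (here, a single counter).
        return env["value"]

    def plan(self, observation: int) -> list[str]:
        # Break the remaining distance into unit subtasks, capped by the current step size.
        remaining = self.goal - observation
        if remaining <= 0:
            return []
        return ["increment"] * min(remaining, self.step_size)

    def act(self, env: dict, actions: list[str]) -> None:
        # Execute each planned subtask against the environment.
        for action in actions:
            if action == "increment":
                env["value"] += 1

    def learn(self, before: int, after: int) -> None:
        # Record the outcome; after a successful cycle, attempt bigger steps next time.
        self.lessons.append(after - before)
        if after > before:
            self.step_size *= 2

    def run(self, env: dict, max_cycles: int = 10) -> int:
        """Repeat the loop until the goal is met; return the number of cycles used."""
        for cycle in range(max_cycles):
            observation = self.perceive(env)
            if observation >= self.goal:
                return cycle
            self.act(env, self.plan(observation))
            self.learn(observation, self.perceive(env))
        return max_cycles
```

In a real agent, `plan` would typically be an LLM call and `learn` might append a short reflection to the next prompt rather than tweak a number.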

Pretrained Models as Reasoning Engines

At the core of many agentic systems is a pretrained large language model (LLM)—such as GPT-4—which provides general-purpose reasoning, planning, and language generation. These models are trained on vast corpora of text and can simulate complex behavior when prompted correctly.

Prompt Engineering for Agency

Prompting plays a critical role in making LLMs behave like agents. Developers use structured prompts to:

Assign roles (“You are a research assistant.”)

Provide task descriptions and constraints

Encourage step-by-step reasoning or self-reflection

These engineered prompts guide the LLM to simulate planning, decision-making, and execution.
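A minimal sketch of such a structured prompt, assuming a plain-text prompt string. The `build_agent_prompt` helper and its wording are illustrative, not a standard template.

```python
def build_agent_prompt(role: str, task: str, constraints: list[str], reflect: bool = True) -> str:
    """Assemble a prompt from a role assignment, a task, constraints, and a reasoning cue."""
    lines = [f"You are {role}.", "", f"Task: {task}", "", "Constraints:"]
    lines += [f"- {c}" for c in constraints]
    if reflect:
        # Cue step-by-step reasoning and a self-check before the final answer.
        lines += ["", "Think step by step. Before giving your final answer, "
                      "review your plan and state any assumptions."]
    return "\n".join(lines)


prompt = build_agent_prompt(
    "a research assistant",
    "Summarize recent work on vector databases.",
    ["Cite your sources", "Use at most 200 words"],
)
```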

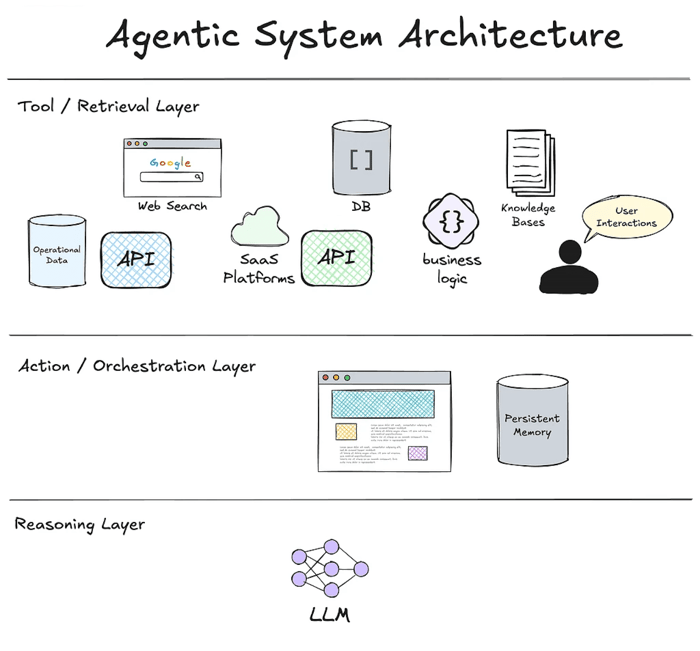

Tool Integration

Agentic AI becomes practical through integration with external tools—e.g., web search APIs, databases, code interpreters, or custom functions. These tools are invoked via structured outputs (e.g., JSON calls), enabling the agent to act beyond its pretraining. Developers define action schemas that map output formats to tool behaviors.
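A minimal sketch of an action schema, assuming the model has been instructed to reply with a JSON object of the form `{"tool": ..., "args": {...}}`. The tool names, the `TOOLS` registry, and the `dispatch` helper are hypothetical; provider-specific function-calling APIs define their own formats.

```python
import json
from typing import Callable


# Hypothetical tools; real ones would call a search API, a database, and so on.
def search_web(query: str) -> str:
    return f"results for '{query}'"


def add(a: float, b: float) -> float:
    return a + b


# The action schema: maps the tool name the model emits to a Python callable.
TOOLS: dict[str, Callable[..., object]] = {"search_web": search_web, "add": add}


def dispatch(model_output: str) -> object:
    """Parse a JSON tool call emitted by the model and execute the matching tool."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model output is not valid JSON: {exc}") from exc
    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")
    name = call.get("tool")
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    return TOOLS[name](**call.get("args", {}))
```

Rejecting unknown tool names keeps the model from invoking anything outside the registry the developer defined.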

Memory Systems

To operate over long sequences, agentic systems often include memory layers. These can take the form of:

Vector stores (e.g., Pinecone, FAISS) for semantic retrieval

Structured databases for storing state

Scratchpads for contextual reasoning

This allows the agent to retain task context, avoid redundant steps, and reference past actions.
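To make semantic retrieval concrete, here is a dependency-free sketch of what a vector store does: embed, store, and rank by cosine similarity. The word-count "embedding" is a deliberate simplification (real systems use a learned embedding model), and `VectorMemory` is a hypothetical class, not the Pinecone or FAISS API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy embedding: lowercase word counts. Real systems use dense learned vectors.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-process stand-in for a vector store: add texts, retrieve the most similar."""

    def __init__(self) -> None:
        self.items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


mem = VectorMemory()
mem.add("The deployment failed because the database password expired")
mem.add("User prefers summaries in bullet points")
```

Before planning a step, the agent would call something like `mem.search(...)` and place the top results in its prompt.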

Limitations of Current Agentic AI

Despite their sophistication, today’s agentic systems are not fully autonomous. They:

Depend heavily on human-authored prompts and tool definitions

Require predefined goals and structured inputs

Cannot reliably learn or adapt their own architecture or long-term behavior

Ultimately, Agentic AI is a powerful orchestration of LLM reasoning, developer-defined logic, and tool augmentation—but it remains bounded by the scaffolding humans provide.

Architectures and Frameworks in Agentic AI

Building an effective agentic system requires a careful selection of architectural patterns and supporting frameworks that govern how agents reason, act, store information, and collaborate. This section explores the key elements of modern agent architectures, the orchestration tools that support them, and the mechanisms that allow agents to coordinate and retain context over time.

Agent Architectures

At a foundational level, agentic systems are designed as either mono-agent or multi-agent architectures:

Mono-agent systems consist of a single LLM-driven agent that performs task planning, tool invocation, and memory handling independently. These are simpler to build and manage but limited in scope and parallelization.

Multi-agent systems distribute responsibilities across multiple specialized agents—e.g., planners, executors, memory managers—working in collaboration. This approach supports more complex workflows, task delegation, and scalability.

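Several open-source frameworks and prompting patterns illustrate this diversity:

ReAct, a prompting pattern rather than a library, combines reasoning and action by interleaving reasoning traces (“thoughts”) with tool calls (“actions”) and the observations they return.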

AutoGPT automates end-to-end goal execution through iterative planning and self-prompting loops.

BabyAGI simplifies task execution using a task queue, prioritizer, and memory loop.

CrewAI introduces role-based multi-agent collaboration.

LangGraph enables stateful agents with graph-based workflows for complex branching logic.
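A minimal sketch of the ReAct pattern, assuming the model writes `Action: tool[input]` lines and signals completion with `Final Answer:`. The scripted model and the `multiply` tool are stand-ins so the loop runs without an LLM; the text format is an illustrative convention, not a fixed protocol.

```python
import re
from typing import Callable


def react_loop(model: Callable[[str], str], tools: dict[str, Callable[[str], str]],
               question: str, max_steps: int = 5) -> str:
    """Alternate model thoughts/actions with tool observations until a final answer."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model(transcript)  # "Thought: ...\nAction: tool[input]" or a final answer
        transcript += step + "\n"
        final = re.search(r"Final Answer:\s*(.*)", step)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*)\]", step)
        if action is None:
            raise ValueError("model output contained neither an Action nor a Final Answer")
        name, arg = action.groups()
        observation = tools[name](arg) if name in tools else f"error: unknown tool {name}"
        # Feed the tool result back so the next thought can build on it.
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("step budget exhausted without a final answer")


def multiply(arg: str) -> str:
    # Hypothetical calculator tool that takes "a, b".
    a, b = (int(x) for x in arg.split(","))
    return str(a * b)


def scripted_model(transcript: str) -> str:
    # Stand-in for an LLM: call the tool first, then answer from the observation.
    if "Observation:" not in transcript:
        return "Thought: I should use the calculator.\nAction: multiply[17, 3]"
    result = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: The tool returned the product.\nFinal Answer: {result}"
```

Here `react_loop(scripted_model, {"multiply": multiply}, "What is 17 times 3?")` returns `"51"` after one action and one observation. The step budget guards against an agent that never converges.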

Orchestration Frameworks

To streamline agent development and execution, orchestration frameworks provide modular components for prompt design, tool integration, memory handling, and output parsing:

LangChain supports agent toolchains and prompt templates with extensive ecosystem integrations.

LangGraph extends LangChain with graph-based execution for branching, memory persistence, and agent loops.

Haystack focuses on RAG-based pipelines and document processing.

Semantic Kernel by Microsoft emphasizes planner-based execution, skill abstraction (skills are now called plugins), and SDKs for C#, Python, and Java.

Memory Management

Effective memory handling is critical to Agentic AI, enabling agents to maintain continuity and relevance across interactions:

Working memory stores short-term context relevant to the current task or conversation.

Long-term memory archives episodic knowledge, historical interactions, or previously encountered data.

These are often implemented using vector stores that enable semantic search and retrieval:

Pinecone, Weaviate, and FAISS are common backends used to store and retrieve embeddings for persistent memory access.
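A sketch of the working/long-term split, assuming working memory is a fixed-size window of recent turns. `AgentMemory` is hypothetical, and its `long_term` list stands in for a vector store such as those named above.

```python
from collections import deque


class AgentMemory:
    """Working memory as a bounded window; evicted turns are archived to long-term memory."""

    def __init__(self, window: int = 3) -> None:
        self.working: deque[str] = deque(maxlen=window)
        self.long_term: list[str] = []  # stand-in for a persistent vector store

    def remember(self, turn: str) -> None:
        if len(self.working) == self.working.maxlen:
            # The oldest turn is about to be evicted: archive it instead of losing it.
            self.long_term.append(self.working[0])
        self.working.append(turn)

    def context(self) -> str:
        # Only the working window is placed in the next prompt by default.
        return "\n".join(self.working)
```

Keeping the prompt-bound window small controls token cost, while archived turns stay available for retrieval when a later step needs them.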

Agent Coordination and Collaboration

In multi-agent systems, agents must coordinate effectively:

Role-based collaboration allows agents to specialize (e.g., planner, executor, validator) and operate within defined scopes. A manager-worker pattern is frequently employed, where a central controller assigns subtasks to sub-agents.

Message-passing systems enable agents to exchange information asynchronously or synchronously. This can be orchestrated via shared memory, task queues, or direct message interfaces to facilitate distributed reasoning and negotiation.
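A sketch of the manager-worker pattern with asynchronous message passing, using Python's `asyncio` queues as per-role inboxes and a shared outbox. The roles, subtasks, and `None` sentinel protocol are assumptions for illustration; workers here just echo a result where a real one would call an LLM or tool.

```python
import asyncio


async def worker(role: str, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """A role-specialized sub-agent: consume subtasks, post results as messages."""
    while True:
        subtask = await inbox.get()
        if subtask is None:  # sentinel from the manager: no more work
            return
        # A real worker would prompt its own model or call tools here.
        await outbox.put((role, f"{role} completed: {subtask}"))


async def manager(plan: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Central controller: route subtasks to workers by role, then collect replies."""
    outbox: asyncio.Queue = asyncio.Queue()
    inboxes = {role: asyncio.Queue() for role in plan}
    workers = [asyncio.create_task(worker(role, q, outbox)) for role, q in inboxes.items()]
    for role, subtasks in plan.items():
        for subtask in subtasks:
            await inboxes[role].put(subtask)
        await inboxes[role].put(None)
    await asyncio.gather(*workers)
    results = []
    while not outbox.empty():
        results.append(outbox.get_nowait())
    return results
```

Because workers run concurrently, replies can arrive in any order, so callers should key on the role (or a task id) rather than on position.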

As agentic systems scale, these architectural patterns and frameworks become essential for building robust, extensible, and context-aware intelligent agents capable of executing complex goals with minimal human oversight.

Technical Implementation Considerations for Agentic AI

Deploying Agentic AI in production environments involves a nuanced combination of prompt design, tool integration, state persistence, and system interoperability. These components ensure agents behave reliably, maintain contextual awareness, and interface effectively with external systems. Below is a breakdown of key implementation domains critical to engineering robust agentic systems.

Prompt Engineering and Behavior Tuning

The behavior of LLM-powered agents is primarily dictated by how they are prompted. Effective prompt engineering simulates agency through structured language cues and constraints:

System prompts define the agent's role, tone, and behavioral boundaries (e.g., "You are a project management assistant that prioritizes clarity and brevity").

Personas can be applied to shape reasoning styles or domain expertise (e.g., researcher, lawyer, developer).

Task conditioning involves using templated instructions and input constraints to guide how agents plan and respond across varied contexts.

Iterative tuning, few-shot examples, and chain-of-thought prompting are frequently used to induce consistent multi-step reasoning.
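A sketch combining these techniques, assuming the common chat format of `{"role": ..., "content": ...}` messages: a system prompt sets the persona, a template conditions every task the same way, and few-shot examples demonstrate step-by-step reasoning before the label. The triage task, template, and examples are hypothetical.

```python
TICKET_TEMPLATE = (
    "Ticket: {ticket}\n"
    "Classify the priority as LOW, MEDIUM, or HIGH. "
    "Reason step by step, then give the label alone on the last line."
)

EXAMPLES = [
    ("Checkout page returns 500 for all users.",
     "All customers are blocked from paying, so impact is total.\nHIGH"),
    ("Typo in the footer copyright year.",
     "Cosmetic issue with no functional impact.\nLOW"),
]


def few_shot_messages(system: str, examples: list[tuple[str, str]], ticket: str) -> list[dict[str, str]]:
    """Build a chat-style message list: persona, worked examples, then the new task."""
    messages = [{"role": "system", "content": system}]
    for example_ticket, worked_answer in examples:
        # Each example is a prior exchange the model can imitate, reasoning included.
        messages.append({"role": "user", "content": TICKET_TEMPLATE.format(ticket=example_ticket)})
        messages.append({"role": "assistant", "content": worked_answer})
    messages.append({"role": "user", "content": TICKET_TEMPLATE.format(ticket=ticket)})
    return messages
```

Putting the label on its own final line is a design choice that makes the answer easy to parse even when the reasoning above it varies.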

Tool/Function Calling

Agents gain real-world utility through tool use, allowing them to perform tasks beyond their pretrained knowledge:

OpenAI function calling lets developers define structured functions (e.g., search_web(query)) that the model can invoke when relevant.

Google Vertex AI agents support similar capabilities via extensions and toolchains.

Hugging Face Agents offer modular tool registries through which language models can execute Python functions, query APIs, or interact with files.

These tools extend agent capabilities from passive generation to active execution.
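The declaration below follows the documented shape of the `tools` parameter in OpenAI's Chat Completions API, using the `search_web(query)` example from the text; the description strings, the optional `max_results` field, and the `check_arguments` helper are illustrative assumptions. The model returns its chosen arguments as a JSON string (in `tool_calls[].function.arguments`), so a lightweight check before execution catches missing or unexpected fields. No API call is made here, and this is not full JSON Schema validation.

```python
import json

# Tool declaration in the shape of OpenAI's "tools" parameter.
SEARCH_WEB_TOOL = {
    "type": "function",
    "function": {
        "name": "search_web",
        "description": "Search the web and return the top results as text.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "The search query."},
                "max_results": {"type": "integer", "description": "How many results to return."},
            },
            "required": ["query"],
        },
    },
}


def check_arguments(tool: dict, arguments_json: str) -> dict:
    """Check model-produced arguments against the declared required/known fields."""
    params = tool["function"]["parameters"]
    args = json.loads(arguments_json)
    missing = [p for p in params["required"] if p not in args]
    unknown = [k for k in args if k not in params["properties"]]
    if missing or unknown:
        raise ValueError(f"invalid arguments: missing={missing} unknown={unknown}")
    return args
```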

State Management and Persistence

Agents operating in long or multi-turn sessions require state management to preserve context, task progress, and memory:

State persistence can involve storing current goals, past interactions, or tool outputs in structured form, for example as JSON documents in Redis or as rows in PostgreSQL.

Caching strategies reduce redundant computation, especially for expensive API calls or repeated queries.

Replay logs can capture full execution traces, aiding debugging, testing, and auditability.
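A sketch of these three ideas using only the standard library, assuming a local JSON file where production systems would use Redis or PostgreSQL. The function names and record fields are hypothetical; `functools.lru_cache` is a real standard-library decorator, but caching only suits calls whose results do not change between requests.

```python
import json
import time
from functools import lru_cache
from pathlib import Path


def save_state(path: Path, state: dict) -> None:
    """Checkpoint goals and progress as JSON (a file stands in for Redis or PostgreSQL)."""
    path.write_text(json.dumps(state, indent=2), encoding="utf-8")


def load_state(path: Path) -> dict:
    """Resume from the last checkpoint, or start fresh if none exists."""
    if not path.exists():
        return {"goal": None, "completed": []}
    return json.loads(path.read_text(encoding="utf-8"))


def log_step(log_path: Path, step: dict) -> None:
    """Append one execution step to a JSON Lines replay log."""
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"ts": time.time(), **step}) + "\n")


def replay(log_path: Path) -> list[dict]:
    """Read the full execution trace back for debugging, testing, or audits."""
    return [json.loads(line) for line in log_path.read_text(encoding="utf-8").splitlines() if line]


@lru_cache(maxsize=256)
def cached_lookup(query: str) -> str:
    """Stand-in for an expensive API call; identical queries are served from cache."""
    return f"answer to {query}"
```

An append-only log is simple to write during execution and can be replayed step by step when reproducing a failure.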

APIs, Plugins, and Integration Patterns

Agentic systems often act as orchestrators across diverse ecosystems. Integration is typically achieved through:

APIs: REST or GraphQL interfaces for accessing third-party data or performing actions (e.g., CRM updates, weather queries).

Plugins: Domain-specific extensions or connectors (e.g., Zapier integrations, browser control plugins).

Shell/database access: For more advanced agents, secure sandboxed execution environments allow for file manipulation, shell scripting, or SQL operations.

Developers must ensure agents interact with external systems securely, with proper authentication, error handling, and fallback logic.
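A sketch of error handling with fallback, assuming transient failures surface as `ConnectionError` or `TimeoutError`. The backoff schedule and the `call_with_retry` helper are illustrative choices, and authentication is out of scope here.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    primary: Callable[[], T],
    fallback: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
) -> T:
    """Retry a flaky external call with exponential backoff, then use a fallback."""
    for attempt in range(attempts):
        try:
            return primary()
        except (ConnectionError, TimeoutError):
            if attempt < attempts - 1:
                # Wait base, 2x base, 4x base, ... plus random jitter.
                time.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    # Every attempt failed: degrade gracefully (cached data, a default, or escalation).
    return fallback()
```

For example, `call_with_retry(lambda: fetch_weather("Oslo"), lambda: "weather unavailable")`, where `fetch_weather` is a hypothetical API wrapper, returns the live result if the API recovers within three attempts and the fallback string otherwise. Only transient error types are retried; other exceptions propagate immediately.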

Together, these implementation domains create the foundation for scalable, interactive agentic applications that can reason, act, and adapt within complex digital environments.

Challenges and Limitations of Agentic AI

While Agentic AI offers transformative capabilities, it also presents a complex set of technical and operational challenges. These limitations, ranging from unpredictable system behavior to security vulnerabilities and performance bottlenecks, must be actively addressed to ensure safe, reliable, and scalable deployment.

Goal Misalignment and Hallucinations

Agentic systems often struggle with goal misalignment, where the agent interprets its objective differently from the human’s intent. This stems from:

Ambiguous or underspecified prompts

Overreliance on the model's internal reasoning without sufficient guardrails

Additionally, agents built on large language models are susceptible to hallucinations: confident yet incorrect outputs, especially during tool invocation, summarization, or multi-hop reasoning. In an agentic pipeline, the output of one step becomes the input to the next, so these errors can compound across tasks.
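A back-of-the-envelope way to see how such errors accumulate, under the simplifying assumption that each of $n$ steps succeeds independently with the same probability $p$ (real failures are rarely independent):

$$
P(\text{run is error-free}) = p^{n}, \qquad \text{e.g. } 0.95^{10} \approx 0.60
$$

So even a step that is right 95% of the time leaves roughly a 40% chance that a ten-step run contains at least one error.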

Memory Drift and Data Contamination

Agents that use persistent memory systems (e.g., vector stores) risk memory drift, where irrelevant or outdated information begins influencing current behavior. Over time, agents may draw from contaminated contexts, leading to:

Redundant or contradictory outputs

Repetition of mistakes from earlier sessions

Conceptual confusion in long-running agents

Careful memory hygiene, data pruning, and scoped retrieval mechanisms are required to mitigate these effects.
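A sketch of memory hygiene and scoped retrieval, assuming each memory records its session and creation time and can be flagged invalid when later evidence contradicts it. `MemoryItem`, `prune`, and `scoped_retrieve` are hypothetical, and substring matching stands in for embedding search.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class MemoryItem:
    text: str
    session: str
    created: float = field(default_factory=time.time)
    valid: bool = True  # flip to False when later evidence contradicts this memory


def prune(items: list[MemoryItem], max_age_s: float, now: Optional[float] = None) -> list[MemoryItem]:
    """Memory hygiene: drop entries that are stale or have been invalidated."""
    now = time.time() if now is None else now
    return [m for m in items if m.valid and now - m.created <= max_age_s]


def scoped_retrieve(items: list[MemoryItem], session: str, keyword: str) -> list[str]:
    """Scoped retrieval: search only the current session's memories."""
    # Keyword matching stands in for semantic (embedding) search.
    return [m.text for m in items if m.session == session and keyword.lower() in m.text.lower()]
```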

Scalability and Latency in Multi-step Planning

Agentic workflows often involve multiple reasoning cycles, tool invocations, and memory lookups. This introduces:

Latency from sequential LLM calls and I/O operations

Scalability constraints when coordinating large multi-agent systems or high-throughput tasks

Techniques such as parallel execution, function caching, and hierarchical task batching are needed to optimize performance at scale.
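A sketch of the parallel-execution idea with `asyncio.gather`, assuming the tool calls are independent and I/O-bound; `fake_tool` is hypothetical and simulates latency with `asyncio.sleep`. Steps that depend on each other's outputs still have to run in sequence.

```python
import asyncio


async def fake_tool(name: str, delay: float) -> str:
    # Stand-in for an I/O-bound tool call (HTTP request, database query, etc.).
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_parallel(calls: list[tuple[str, float]]) -> list[str]:
    """Run independent tool calls concurrently; wall time tracks the slowest call, not the sum."""
    return await asyncio.gather(*(fake_tool(name, delay) for name, delay in calls))
```

`asyncio.gather` returns results in the order the awaitables were passed, so downstream steps can rely on position even though the calls overlap in time.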

Security and Sandbox Concerns

Agents capable of shell access, file manipulation, or API calls pose serious security risks. Without strict sandboxing and permission control, malicious prompts or tool misuse can:

Exfiltrate sensitive data

Corrupt systems or files

Trigger unintended external actions

Security audits, fine-grained permissions, and rate-limiting must be enforced for all agent-executed functions.
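A sketch of permission control and rate limiting applied to every agent-executed function, assuming all tools are invoked through a single gate. `ToolGuard`, its deny-by-default allowlist, and its sliding-window limit are illustrative; they complement, rather than replace, OS-level sandboxing.

```python
import time
from typing import Callable


class ToolGuard:
    """Allowlist plus sliding-window rate limit around agent-executed functions."""

    def __init__(self, allowed: set[str], max_calls: int, per_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.allowed = allowed
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock
        self.history: dict[str, list[float]] = {}

    def call(self, name: str, fn: Callable[..., object], *args, **kwargs) -> object:
        # Deny by default: only explicitly granted tools may run.
        if name not in self.allowed:
            raise PermissionError(f"tool {name!r} is not permitted for this agent")
        now = self.clock()
        # Keep only the timestamps that fall inside the current window.
        recent = [t for t in self.history.get(name, []) if now - t < self.per_seconds]
        if len(recent) >= self.max_calls:
            raise RuntimeError(f"rate limit exceeded for tool {name!r}")
        recent.append(now)
        self.history[name] = recent
        return fn(*args, **kwargs)
```

Injecting the clock keeps the limiter testable; in production the default monotonic clock avoids surprises from wall-clock adjustments.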

Evaluation and Trustworthiness

Evaluating Agentic AI is non-trivial. Unlike single-turn LLM outputs, agents exhibit:

Emergent behaviors over multiple steps

Unpredictable tool use paths

Divergent strategies for the same task

Standard single-output metrics (e.g., accuracy or BLEU) are insufficient on their own. Evaluation must also include:

Goal completion rates

Human-in-the-loop assessments

Behavioral audits across varied scenarios

Establishing trust in agentic systems requires continuous monitoring, logging, and interpretability tooling.
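A sketch of trajectory-level metrics, assuming each run is logged as a record with a pass/fail judgment from an automated checker or a human reviewer. The `Trajectory` fields are hypothetical. Running the same task several times surfaces the divergent strategies noted above.

```python
from dataclasses import dataclass


@dataclass
class Trajectory:
    task_id: str
    goal_met: bool   # judged by a checker or a human reviewer
    steps: int
    tool_errors: int


def summarize(runs: list[Trajectory]) -> dict[str, float]:
    """Aggregate multi-step agent runs into trajectory-level metrics."""
    n = len(runs)
    if n == 0:
        return {"goal_completion_rate": 0.0, "avg_steps": 0.0, "runs_with_tool_errors": 0.0}
    return {
        "goal_completion_rate": sum(r.goal_met for r in runs) / n,
        "avg_steps": sum(r.steps for r in runs) / n,
        "runs_with_tool_errors": sum(r.tool_errors > 0 for r in runs) / n,
    }


runs = [
    Trajectory("t1", True, 4, 0),
    Trajectory("t1", False, 9, 2),  # same task, different path and outcome
    Trajectory("t2", True, 5, 0),
    Trajectory("t2", True, 6, 1),
]
```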

In summary, while Agentic AI offers powerful new paradigms, its real-world adoption requires careful consideration of its limitations. Balancing capability and control will be essential for its responsible development.

Conclusion

Agentic AI represents a significant evolution in artificial intelligence, moving beyond static and reactive models to dynamic systems that can independently reason, act with specific goals, and continuously interact with tools and memory. Built upon advanced language models like GPT-4, these agentic systems simulate intelligence not merely by predicting responses but by orchestrating complex sequences of perception, planning, execution, and adaptation.

The future of agentic AI is promising, with the potential to transform industries such as logistics and healthcare through end-to-end automation. Advances in large language models (LLMs), multi-agent systems, and tool integration may bring these systems closer to general-purpose capability. However, achieving truly autonomous agents will require overcoming technical challenges such as continuous learning and robust reasoning in unpredictable environments.

Akava would love to help your organization adapt, evolve and innovate your modernization initiatives. If you’re looking to discuss, strategize or implement any of these processes, reach out to [email protected] and reference this post.